Machine Learning for Red Teams, Part 1

TLDR: It’s possible to detect a sandbox using a process list with machine learning.

Introduction

For attackers, aggressive data collection by defenders often leads to the disclosure of infrastructure and initial access techniques, and to malware being unceremoniously pulled apart by analysts. The application of machine learning in the defensive space has not only increased the cost of being an attacker, but has also significantly shortened a technique’s operational life. In the world that attackers currently find themselves in:

- Mass data collection and analysis is accessible to defensive software, and by extension, defensive analysts

- Machine learning is being used everywhere to accelerate defensive maturity

- Attackers are always at a disadvantage, as we humans try to defeat self-learning systems that use every bypass attempt to learn more about us and to predict future bypass attempts. This is especially true for public research and static bypasses.

However, as we will present here, machine learning isn’t just for blue teams. This post will explore how attackers can make use of the little data they have to perform their own machine learning. We will present a case study that focuses on initial access. By the end of the post, we hope that you will have a better understanding of machine learning, and how we as attackers can apply machine learning for our own benefit.

A Process List As Data

Before discussing machine learning, we need to take a closer look at how we as attackers process information. I would argue that attackers gather less than 1% of the information available to them on any given host or network, and use less than 3% of the collected information to make informed decisions (don’t get too hung up on the percentages). Increasing data collection efforts in the name of machine learning would come at a cost to stealth, with no foreseeable benefit. Gathering more data isn’t the best solution for attackers; attackers need to increase their data utilization. However, increasing data utilization is difficult due to the textual nature of command output. For example, other than showing particular processes, architecture, and users, what more can the following process list really provide?

| PID | ARCH | SESS | SYSTEM NAME | OWNER | PATH |

|---|---|---|---|---|---|

| 1 | x64 | 0 | smss.exe | NT AUTHORITYSYSTEM | SystemRootSystem32smss.exe |

| 4 | x64 | 0 | csrss.exe | NT AUTHORITYSYSTEM | C:Windowssystem32csrss.exe |

| 236 | x64 | 0 | wininit.exe | NT AUTHORITYSYSTEM | C:Windowssystem32wininit.exe |

| 312 | x64 | 0 | csrss.exe | NT AUTHORITYSYSTEM | C:Windowssystem32csrss.exe |

| 348 | x64 | 1 | winlogon.exe | NT AUTHORITYSYSTEM | C:Windowssystem32winlogon.exe |

| 360 | x64 | 1 | services.exe | NT AUTHORITYSYSTEM | C:Windowssystem32services.exe |

| 400 | x64 | 0 | lsass.exe | NT AUTHORITYSYSTEM | C:Windowssystem32lsass.exe |

| 444 | x64 | 0 | lsm.exe | NT AUTHORITYSYSTEM | C:Windowssystem32lsm.exe |

| 452 | x64 | 0 | svchost.exe | NT AUTHORITYSYSTEM | C:Windowssystem32svchost.exe |

| 460 | x64 | 0 | svchost.exe | NT AUTHORITYNETWORK SERVICE | C:Windowssystem32svchost.exe |

| 564 | x64 | 0 | svchost.exe | NT AUTHORITYLOCAL SERVICE | C:Windowssystem32svchost.exe |

| 632 | x64 | 0 | svchost.exe | NT AUTHORITYSYSTEM | C:Windowssystem32svchost.exe |

| 688 | x64 | 0 | svchost.exe | NT AUTHORITYSYSTEM | C:Windowssystem32svchost.exe |

| 804 | x64 | 0 | svchost.exe | NT AUTHORITYLOCAL SERVICE | C:Windowssystem32svchost.exe |

| 852 | x64 | 0 | spoolsv.exe | NT AUTHORITYSYSTEM | C:WindowsSystem32spoolsv.exe |

| 964 | x64 | 0 | taskhost.exe | Admin-PCAdmin | C:Windowssystem32taskhost.exe |

| 1004 | x64 | 1 | dwm.exe | Admin-PCAdmin | C:Windowssystem32Dwm.exe |

| 1044 | x64 | 1 | svchost.exe | NT AUTHORITYLOCAL SERVICE | C:Windowssystem32svchost.exe |

| 1052 | x64 | 0 | explorer.exe | Admin-PCAdmin | C:WindowsExplorer.EXE |

| 1064 | x64 | 1 | svchost.exe | NT AUTHORITYNETWORK SERVICE | C:WindowsSystem32svchost.exe |

| 1120 | x64 | 0 | svchost.exe | NT AUTHORITYLOCAL SERVICE | C:WindowsSystem32svchost.exe |

| 1188 | x64 | 0 | UI0Detect.exe | NT AUTHORITYSYSTEM | C:Windowssystem32UI0Detect.exe |

| 1344 | x64 | 0 | svchost.exe | NT AUTHORITYNETWORK SERVICE | C:Windowssystem32svchost.exe |

| 1836 | x64 | 0 | taskhost.exe | NT AUTHORITYLOCAL SERVICE | C:Windowssystem32taskhost.exe |

| 1972 | x64 | 0 | taskhost.exe | Admin-PCAdmin | C:Windowssystem32taskhost.exe |

| 508 | x64 | 1 | WinSAT.exe | Admin-PCAdmin | C:Windowssystem32winsat.exe |

| 828 | x64 | 1 | conhost.exe | Admin-PCAdmin | C:Windowssystem32conhost.exe |

| 652 | x64 | 1 | unsecapp.exe | NT AUTHORITYSYSTEM | C:Windowssystem32wbemunsecapp.exe |

| 684 | x64 | 0 | WmiPrvSE.exe | NT AUTHORITYSYSTEM | C:Windowssystem32wbemwmiprvse.exe |

| 2712 | x64 | 0 | conhost.exe | Admin-PCAdmin | C:Windowssystem32conhost.exe |

| 2796 | x64 | 1 | svchost.exe | NT AUTHORITYSYSTEM | C:WindowsSystem32svchost.exe |

| 2852 | x64 | 0 | svchost.exe | NT AUTHORITYSYSTEM | C:WindowsSystem32svchost.exe |

| 2928 | x64 | 0 | svchost.exe | NT AUTHORITYSYSTEM | C:WindowsSystem32svchost.exe |

Textual data also makes it hard to describe the differences between two process lists. How would you describe the differences between the process lists on different hosts?

A solution to this problem already exists – we can describe a process list numerically. Looking at the process list above, we can derive some simple numerical data:

- There are 33 processes

- The ratio of processes to users is 8.25

- There are 4 observable users
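These three values can be computed mechanically from the OWNER column. Below is a minimal Python sketch, not the authors' tooling: it assumes each process is represented as an `(image_name, owner)` pair, and the function name `process_list_features` is hypothetical. The example reproduces only the owner tallies from the process list above (18 SYSTEM, 3 NETWORK SERVICE, 5 LOCAL SERVICE, 7 Admin); the image names are placeholders.

```python
from typing import Iterable, Tuple


def process_list_features(processes: Iterable[Tuple[str, str]]) -> Tuple[int, float, int]:
    """Return (process count, processes per user, user count) for one host.

    Each item is an (image_name, owner) pair, e.g. ("svchost.exe", "NT AUTHORITY\\SYSTEM").
    Processes with a blank owner still count as processes, but not as users.
    """
    processes = list(processes)
    process_count = len(processes)
    users = {owner for _, owner in processes if owner}
    user_count = len(users)
    # Avoid dividing by zero when no owners were visible.
    per_user = process_count / user_count if user_count else 0.0
    return process_count, per_user, user_count


# Owner tallies from the example process list; image names are placeholders.
example = (
    [("svchost.exe", "NT AUTHORITY\\SYSTEM")] * 18
    + [("svchost.exe", "NT AUTHORITY\\NETWORK SERVICE")] * 3
    + [("svchost.exe", "NT AUTHORITY\\LOCAL SERVICE")] * 5
    + [("explorer.exe", "Admin-PC\\Admin")] * 7
)
print(process_list_features(example))  # (33, 8.25, 4)
```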

By describing items numerically, we can start to analyze differences, rank, and categorize items. Let’s add a second process list.

| | Process List A | Process List B |
|---|---|---|
| Process Count | 33 | 157 |
| Process Count/User | 8.25 | 157 |
| User Count | 4 | 1 |

Viewing the numerical descriptions side by side reveals clear differences between the two process lists. We can now measure a process list on any given host without knowing exactly which processes are running. So far, that doesn’t seem very useful, but knowing that Process List A is a sandbox and Process List B is not, we can examine the four new process lists below. Which of them are sandboxes?

| | Process List C | Process List D | Process List E | Process List F |
|---|---|---|---|---|
| Process Count | 30 | 84 | 195 | 34 |
| Process Count/User | 7.5 | 84 | 195 | 8.5 |
| User Count | 4 | 1 | 1 | 4 |

How might we figure this out? Our solution was to sum the values in each host’s column, then calculate the average of those host totals. Hosts with a below-average total are marked 1 (sandbox), and hosts with an above-average total are marked 0 (normal host).

| | A | B | C | D | E | F | Host Total Average |
|---|---|---|---|---|---|---|---|
| Process Count | 33 | 157 | 30 | 84 | 195 | 34 | |
| Process Count/User | 8.25 | 157 | 7.5 | 84 | 195 | 8.5 | |
| User Count | 4 | 1 | 4 | 1 | 1 | 4 | |
| Host Total | 45.25 | 315 | 41.5 | 169 | 391 | 46.5 | 168.04 |
| Sandbox Score | 1 | 0 | 1 | 0 | 0 | 1 | |
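The arbitrary heuristic above is short enough to express directly. This Python sketch (the names `hosts` and `naive_sandbox_scores` are ours, for illustration) sums each host's three values, averages the totals, and marks below-average hosts as sandboxes; it reproduces the 168.04 average and the Sandbox Score row.

```python
# Raw (process count, process count/user, user count) values from the tables above.
hosts = {
    "A": (33, 8.25, 4),
    "B": (157, 157, 1),
    "C": (30, 7.5, 4),
    "D": (84, 84, 1),
    "E": (195, 195, 1),
    "F": (34, 8.5, 4),
}


def naive_sandbox_scores(host_values):
    """Sum each host's values; below-average totals are labelled 1 (sandbox), others 0."""
    totals = {name: sum(values) for name, values in host_values.items()}
    average = sum(totals.values()) / len(totals)
    labels = {name: int(total < average) for name, total in totals.items()}
    return totals, average, labels


totals, average, labels = naive_sandbox_scores(hosts)
print(round(average, 2))  # 168.04
print(labels)  # {'A': 1, 'B': 0, 'C': 1, 'D': 0, 'E': 0, 'F': 1}
```

Host D's total (84 + 84 + 1 = 169) sits barely above the average, one illustration of how fragile this threshold is.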

Our solution seems to work well; however, it was completely arbitrary. It’s likely that before working through our solution, you had already figured out which process lists were sandboxes. Not only did you correctly categorize the process lists, but you did so without any textual data, for four process lists you’ve never seen! Using the same data points, we can use machine learning to correctly categorize a process list.

Machine Learning for Lowly Operators

The mathematical techniques used in machine learning attempt to replicate human learning. Much like the human brain has neurons, synapses, and electrical impulses that are all connected, artificial neural networks have nodes, weights, and an activation function that are all connected. Through repetition and small adjustments between each iteration, both humans and artificial neural networks are able to get closer to an expected output. Effectively, machine learning attempts to replicate your brain with math, and both kinds of network operate in a similar fashion.

In biology, an electrical impulse enters a neural network, travels across a synapse, and is processed by a neuron. The strength of the impulse received from the synapse determines whether or not the neuron is activated. Performing the same action repeatedly strengthens the synapses between particular neurons.

In machine learning, an input is introduced into an artificial neural network. The input travels along a link weight into a node where it is passed into an activation function. The output of the activation function determines whether or not the node is activated. By iteratively examining outputs relative to a target value, link weights can be adjusted to reduce the error.
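A single node can be sketched in a few lines of Python. This is an illustration under assumptions, not the authors' network: it uses the logistic sigmoid as the activation function (a common choice; the post does not name its activation), and the input and weight values are made up.

```python
import math
from typing import Sequence


def sigmoid(x: float) -> float:
    """Logistic activation: maps any real number into the range [0, 1]."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # equivalent form for negative x, so math.exp never overflows
    return z / (1.0 + z)


def node_output(inputs: Sequence[float], weights: Sequence[float]) -> float:
    """One node: multiply each input by its link weight, sum, then apply the activation."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return sigmoid(weighted_sum)


# Illustrative values only: three inputs and three link weights.
print(round(node_output([0.5, -1.0, 2.0], [0.4, 0.2, -0.1]), 4))  # 0.4502
```

Training, covered below, is the process of adjusting those weights so the output moves toward the expected value.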

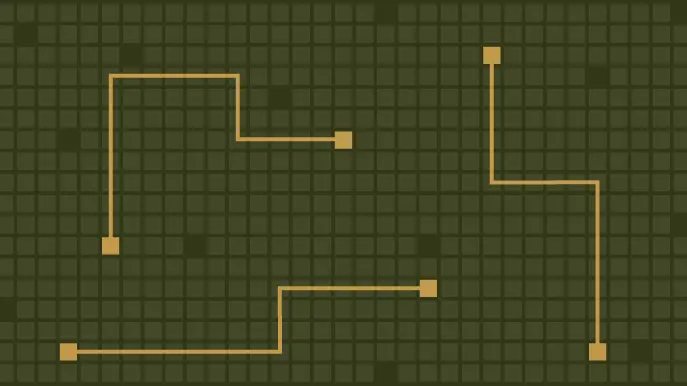

Artificial Neural Networks (ANNs) can be of arbitrary size. The network explored in this post has 3 inputs, 3 hidden layers, and a single output. One thing to notice about the larger ANN is the number of connections between nodes. Each connection represents an additional calculation the network performs, giving it more capacity to fit the data, which can improve its accuracy. Additionally, as the ANN increases in size, the math doesn’t change (unless you want to get fancy), only the number of calculations.
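To illustrate that growing the network only adds calculations, here is a NumPy sketch of a forward pass that applies the same multiply-then-activate step at every layer. It assumes sigmoid activations, no bias terms, and three nodes per hidden layer (the post gives the layer count but not the width); the weights are random and untrained, so the output is not yet a meaningful prediction. The input is Process List A's scaled values, shown in the next section.

```python
from typing import Sequence

import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def forward(inputs: np.ndarray, weights: Sequence[np.ndarray]) -> np.ndarray:
    """Propagate inputs through every layer of a fully connected network.

    Each weight matrix has shape (nodes in next layer, nodes in this layer),
    so every entry is one connection (link weight) between two nodes.
    """
    signal = inputs
    for w in weights:
        signal = sigmoid(w @ signal)  # same math at every layer
    return signal


rng = np.random.default_rng(0)
# 3 inputs, three hidden layers of 3 nodes (assumed width), 1 output.
layer_sizes = [3, 3, 3, 3, 1]
weights = [
    rng.normal(0.0, n_in ** -0.5, size=(n_out, n_in))
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
]
connections = sum(w.size for w in weights)  # 9 + 9 + 9 + 3 = 30 link weights

x = np.array([-0.786, -0.501, 0.812])  # Process List A, scaled
print(connections, forward(x, weights))
```

Adding a layer or widening one only adds another matrix (or a bigger one) to `weights`; the `forward` loop itself stays the same.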

Gathering and Preparing Data

Gathering a dataset of process lists is relatively easy. Any document with a macro will be executed in a sandbox by any half-decent mail filter, and the remaining executions come from normal hosts. To get a process list from a sandbox or a remote system, the macro needs to gather a process list and post it back for collection and processing. For processing, the dataset needs to be parsed: the process count, process-to-user ratio, and user count need to be calculated and saved. Finally, each item in the dataset needs to be correctly labelled with either 0 or 1 (1 for a sandbox, 0 for a normal host). Alternatively, the macro could derive the numerical data from the process list itself and post only the results back. Choose your own adventure; we prefer to have the raw process list for operational purposes.
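The parsing and labelling step might look like the following Python sketch. The post does not describe its post-back format, so this assumes a hypothetical one: one process per line, comma-separated in the same column order as the process list table (PID, ARCH, SESS, name, owner, path). `features_from_postback` and `build_dataset` are hypothetical names; the output is CSV with label 1 for a sandbox and 0 for a normal host.

```python
import csv
import io
from typing import Iterable, List, Tuple

FIELDS = ["process_count", "process_per_user", "user_count", "label"]


def features_from_postback(raw: str) -> List[float]:
    """Parse one post-back (hypothetical format: pid,arch,sess,name,owner,path per line)."""
    rows = [row for row in csv.reader(io.StringIO(raw)) if len(row) >= 6]
    users = {row[4] for row in rows if row[4]}
    count = len(rows)
    per_user = count / len(users) if users else 0.0
    return [count, per_user, len(users)]


def build_dataset(samples: Iterable[Tuple[str, int]]) -> str:
    """Turn (raw post-back, label) pairs into CSV text; label 1 = sandbox, 0 = normal host."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(FIELDS)
    for raw, label in samples:
        writer.writerow(features_from_postback(raw) + [label])
    return out.getvalue()


# Three rows from the example process list, in the assumed post-back format.
sample = (
    "1,x64,0,smss.exe,NT AUTHORITY\\SYSTEM,\\SystemRoot\\System32\\smss.exe\n"
    "1052,x64,0,explorer.exe,Admin-PC\\Admin,C:\\Windows\\Explorer.EXE\n"
    "1972,x64,0,taskhost.exe,Admin-PC\\Admin,C:\\Windows\\system32\\taskhost.exe\n"
)
print(build_dataset([(sample, 1)]))
```

Keeping the raw post-backs and deriving features server-side, as the authors prefer, means the feature set can be changed later without redeploying the macro.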

There is one more transformation we need to make to the process list data set. Earlier, we compared the total of each process list to the average of all process list totals. Using an average in this way is problematic, as very large or very small process list results can shift the average significantly. Significant shifts would reclassify potentially large numbers of hosts, introducing volatility into our predictions. To help with this, we scale (normalize) the data set. There are a few techniques for doing this; we tested all the scaling functions from scikit-learn and chose the StandardScaler transform. What is important here is that overly large or small values no longer have such a volatile effect on classification.

| | A | B | C | D | E | F |
|---|---|---|---|---|---|---|
| Process Count | -0.786 | 1.285 | -0.836 | -0.065 | 1.92 | -0.770 |
| Process Count/User | -0.501 | 1.652 | -0.663 | 0.521 | 0.846 | -0.648 |
| User Count | 0.812 | -0.902 | 0.813 | -0.902 | -0.902 | 0.813 |
| Label | 1 | 0 | 1 | 0 | 0 | 1 |
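StandardScaler's transform is a per-column z-score. The following NumPy sketch (not the authors' code) implements the same calculation by hand: subtract each column's mean and divide by its population standard deviation, which is what scikit-learn's StandardScaler does with default settings. Because it is fitted on only the six example hosts, its output will not match the table above, whose values the post says come from the full data set.

```python
import numpy as np

# Raw (process count, process count/user, user count) rows for hosts A-F.
raw = np.array(
    [
        [33, 8.25, 4],
        [157, 157, 1],
        [30, 7.5, 4],
        [84, 84, 1],
        [195, 195, 1],
        [34, 8.5, 4],
    ]
)


def fit_standardizer(x: np.ndarray):
    """Learn each column's mean and population standard deviation (ddof=0)."""
    mean = x.mean(axis=0)
    std = x.std(axis=0)
    std[std == 0] = 1.0  # leave constant columns unscaled instead of dividing by zero
    return mean, std


def standardize(x: np.ndarray, mean: np.ndarray, std: np.ndarray) -> np.ndarray:
    """z = (x - mean) / std, applied column by column."""
    return (x - mean) / std


mean, std = fit_standardizer(raw)
scaled = standardize(raw, mean, std)
print(np.round(scaled, 3))

# A new host must be scaled with the statistics saved from training, not refitted.
new_host = np.array([[40, 10.0, 4]])
print(np.round(standardize(new_host, mean, std), 3))
```

Splitting "fit" from "transform" mirrors how a scaler is used in practice: the mean and standard deviation are learned once from the training data and then reused for every host the network scores later.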

Building and Training a Network

The data used in the example above is pulled from our data set. With it, we can start to explore how machine learning can help attackers detect sandboxes. At a high level, to successfully train an artificial neural network, we iteratively:

1. Introduce scaled data into the artificial neural network.

2. Calculate the output of the activation function.

3. Provide feedback to the network in the form of 0 or 1 (its label).

4. Calculate the difference between the output and the feedback.

5. Update the link weights in an attempt to reduce the difference calculated in step 4.

Some of you may be wondering about step 3. A small but significant detail responsible for our earlier success at detecting a sandbox was the fact that we told you “Process List A” was a sandbox. From then on, the values of Process List A provided a reference point for everything else. An artificial neural network requires a similar reference point in order to measure how “wrong” it was.
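The five steps can be seen in a small from-scratch training loop. This NumPy sketch is not the authors' scratchpad code or their scikit-learn models: it assumes one hidden layer of 3 nodes with bias terms, sigmoid activations, squared error, and per-example gradient descent (backpropagation) with a learning rate of 0.5, and it trains on only the six scaled rows from the table above. It demonstrates the mechanics, not a usable detector.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


# Scaled features and labels for hosts A-F from the table above (1 = sandbox).
X = np.array([
    [-0.786, -0.501, 0.812],
    [1.285, 1.652, -0.902],
    [-0.836, -0.663, 0.813],
    [-0.065, 0.521, -0.902],
    [1.92, 0.846, -0.902],
    [-0.770, -0.648, 0.813],
])
y = np.array([1, 0, 1, 0, 0, 1], dtype=float)

rng = np.random.default_rng(42)
w_hidden = rng.normal(0.0, 3 ** -0.5, size=(3, 3))  # hidden x input link weights
b_hidden = np.zeros(3)
w_out = rng.normal(0.0, 3 ** -0.5, size=3)          # output x hidden link weights
b_out = 0.0
learning_rate = 0.5

for epoch in range(5000):
    for features, target in zip(X, y):
        # Steps 1-2: feed the scaled inputs forward through the activations.
        hidden = sigmoid(w_hidden @ features + b_hidden)
        output = sigmoid(w_out @ hidden + b_out)
        # Steps 3-4: compare the output with the label (the feedback).
        error = target - output
        # Step 5: backpropagate the error and nudge every weight to shrink it,
        # using the sigmoid derivative s * (1 - s).
        delta_out = error * output * (1.0 - output)
        delta_hidden = delta_out * w_out * hidden * (1.0 - hidden)
        w_out += learning_rate * delta_out * hidden
        b_out += learning_rate * delta_out
        w_hidden += learning_rate * np.outer(delta_hidden, features)
        b_hidden += learning_rate * delta_hidden


def predict(features):
    hidden = sigmoid(w_hidden @ features + b_hidden)
    return float(sigmoid(w_out @ hidden + b_out))


print([round(predict(row)) for row in X])
```

After training, rounding each output to 0 or 1 should recover the labels; with only six examples, that says nothing about how the network would generalize to hosts it has never seen.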

Using scikit-learn, we trained 3 models on 190 unique process lists. The data was scaled in 3 different ways, and the scaling methods were also combined. In the end, we simply chose the model that performed best. When the network receives process list data it has never seen before, it will (hopefully) output an accurate prediction.

The scratchpad code for the neural network can be found here. We opted not to explain all the math involved, although we did write it up. If you have questions, feel free to send them our way. Otherwise, if you’re just generally interested, we highly recommend Tariq Rashid’s book, “Make Your Own Neural Network”.

Weaponizing An Artificial Neural Network

Time to put the ANN to work. To test our proof-of-concept, we wrote a simple macro that:

- Collected a process list

- Calculated the inputs (process count, process/user count, and user count)

- Posted the values back to our server and ran them through the neural network for a prediction

- Staged code if the neural network predicted a normal host; otherwise, did nothing.

Next, we uploaded the malicious document to several online malware scanners (VirusTotal, malware-analysis, etc.) and waited. We executed the macro on a non-sandbox host (the first highlighted post-back); then, after a few minutes, 2 different sandboxes executed the macro and posted the calculated values back. Running the post-back values through the neural network produced 3 accurate predictions!

Based on the prediction, additional logic decides whether or not to deploy malware. To recap what we accomplished, we:

- Derived numerical values from a process list

- Built a dataset of those values and properly scaled them

- Trained an artificial neural network to successfully categorize a sandbox, based on the dataset

- Wrote a macro to post-back required values

- Sent in some test payloads and used the post-back values to predict a categorization

Conclusion

Hopefully, this was a good introduction to how attackers can harness the power of machine learning. We were able to successfully classify sandboxes from the wild. Most notably, the checks were not static and drew on knowledge of every process list in the data set. For style points, the network we created could be embedded in an Excel document to perform the checks client-side.

Regardless of where the ANN sits, machine learning will no doubt change the face of offensive security. From malware with embedded networks to operator assistance, the possibilities are endless (and very exciting).

Credits and Sources

First and foremost, I want to thank Tariq Rashid (@rzeta0) for his book, “Make Your Own Neural Network”. It’s everything you want to know about machine learning, without all the “math-splaining.” Tariq also kindly answered a few questions I had along the way.

Secondly, I would like to thank James McCaffrey of Microsoft for checking my math and giving me back some of my sanity.

If this post piqued your interest in machine learning, I highly recommend “Make Your Own Neural Network” as a starting place.

Here are some other links that were helpful to us (if you go down this road):

- https://hmkcode.github.io/ai/backpropagation-step-by-step/

- https://www.khanacademy.org/math/ap-calculus-ab/ab-differentiation-2-new/ab-3-1a/v/chain-rule-introduction

- https://stats.stackexchange.com/questions/162988/why-sigmoid-function-instead-of-anything-else

- https://neuralnetworksanddeeplearning.com/chap1.html

- https://neuralnetworksanddeeplearning.com/chap2.html
