How Threat Actors Attack AI – and How to Stop Them

It’s not often that I have the chance to speak to a room full of CISOs, so I was especially excited to present when I recently had this opportunity. I spoke on the trending topic of generative AI and large language models (LLMs), specifically what types of AI security testing CISOs should look for when implementing these systems.

AI can no longer be ignored. It is expanding rapidly, and businesses are clearly adopting it for many purposes: in the back office, in front-office tools that serve customers, and in intriguing projects such as AI built to chat with other AI. These developments range from amusing demonstrations to scenarios that stoke real fears about AI’s potential impact.

Despite these concerns, the challenges AI poses are manageable, as long as you follow sound strategies to navigate its risks and harness its power.

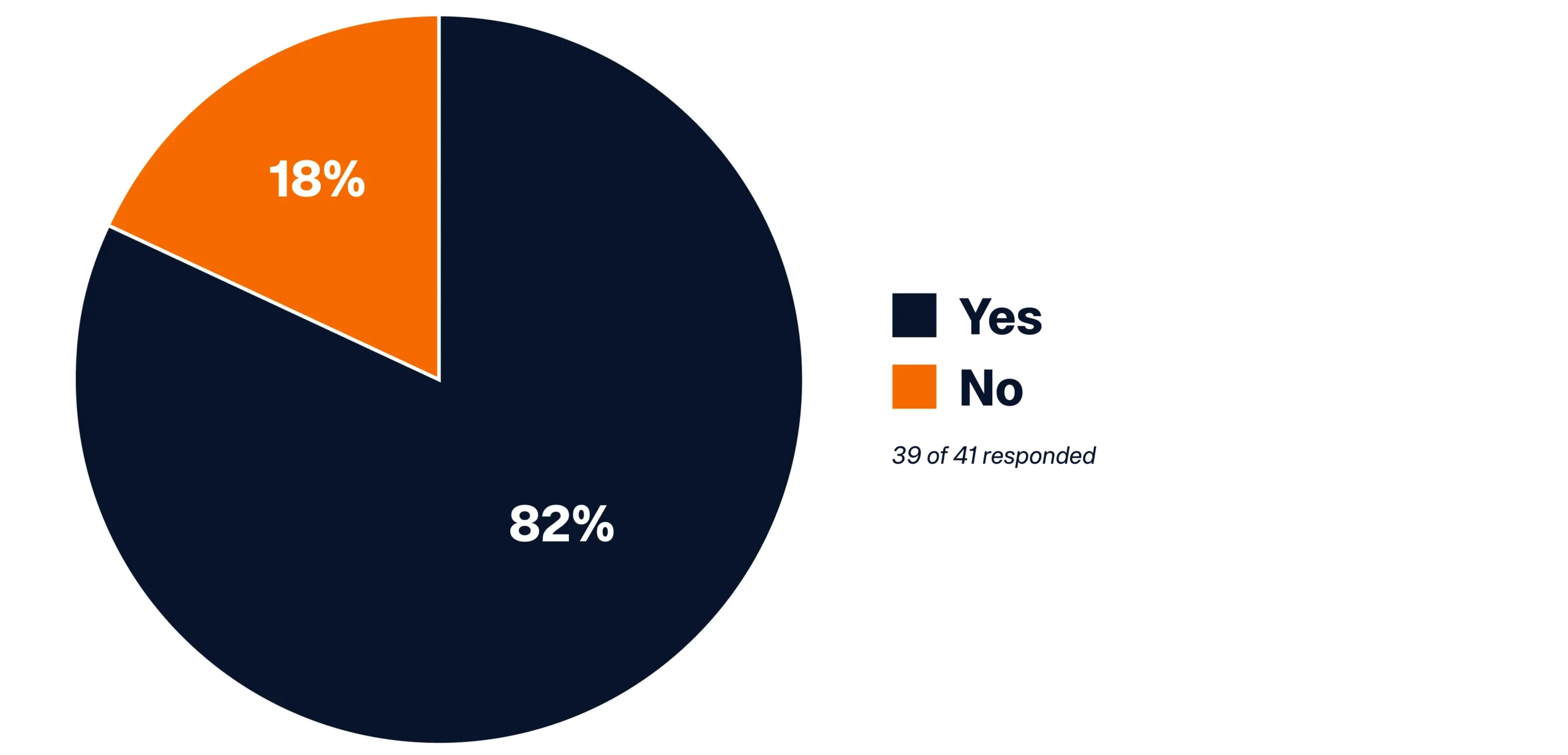

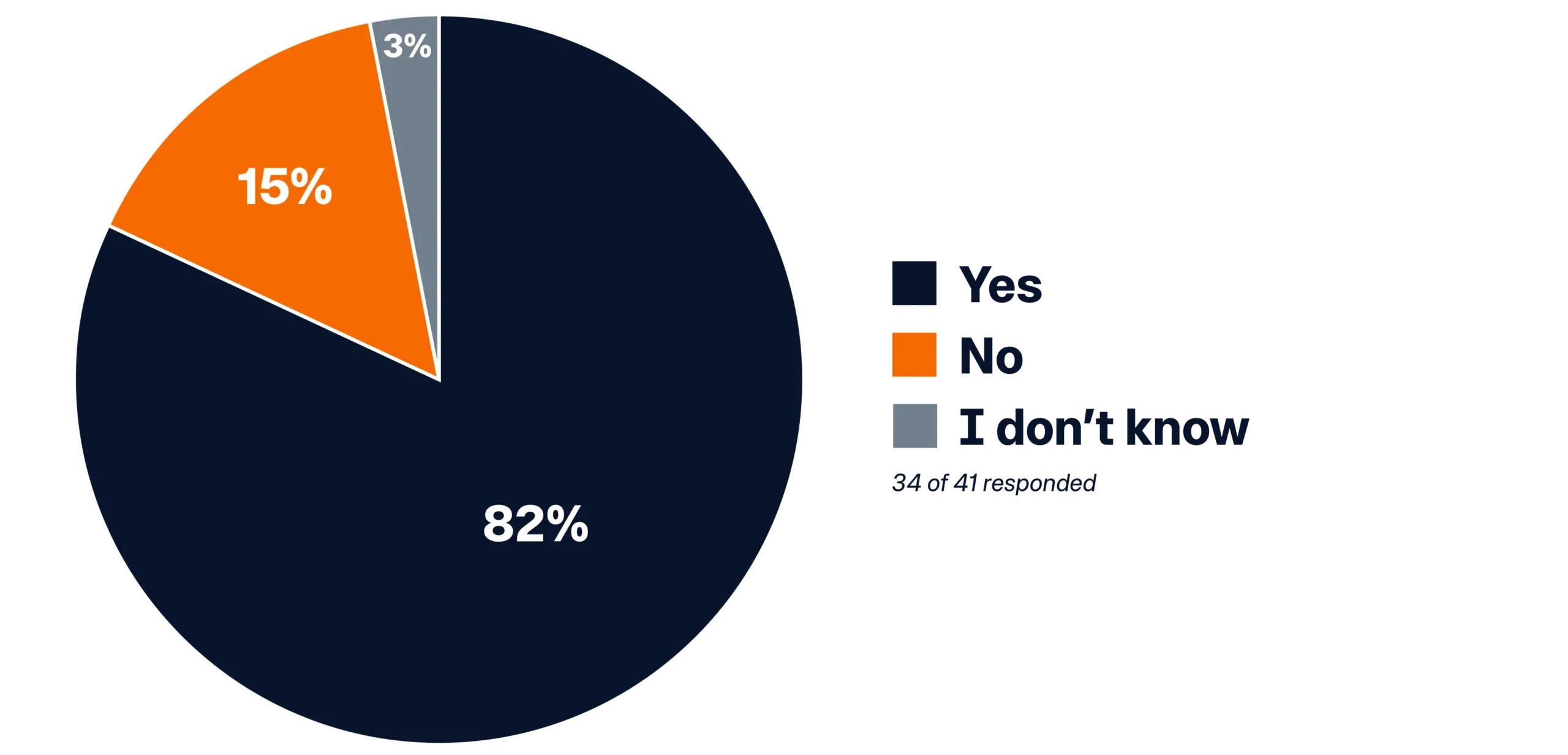

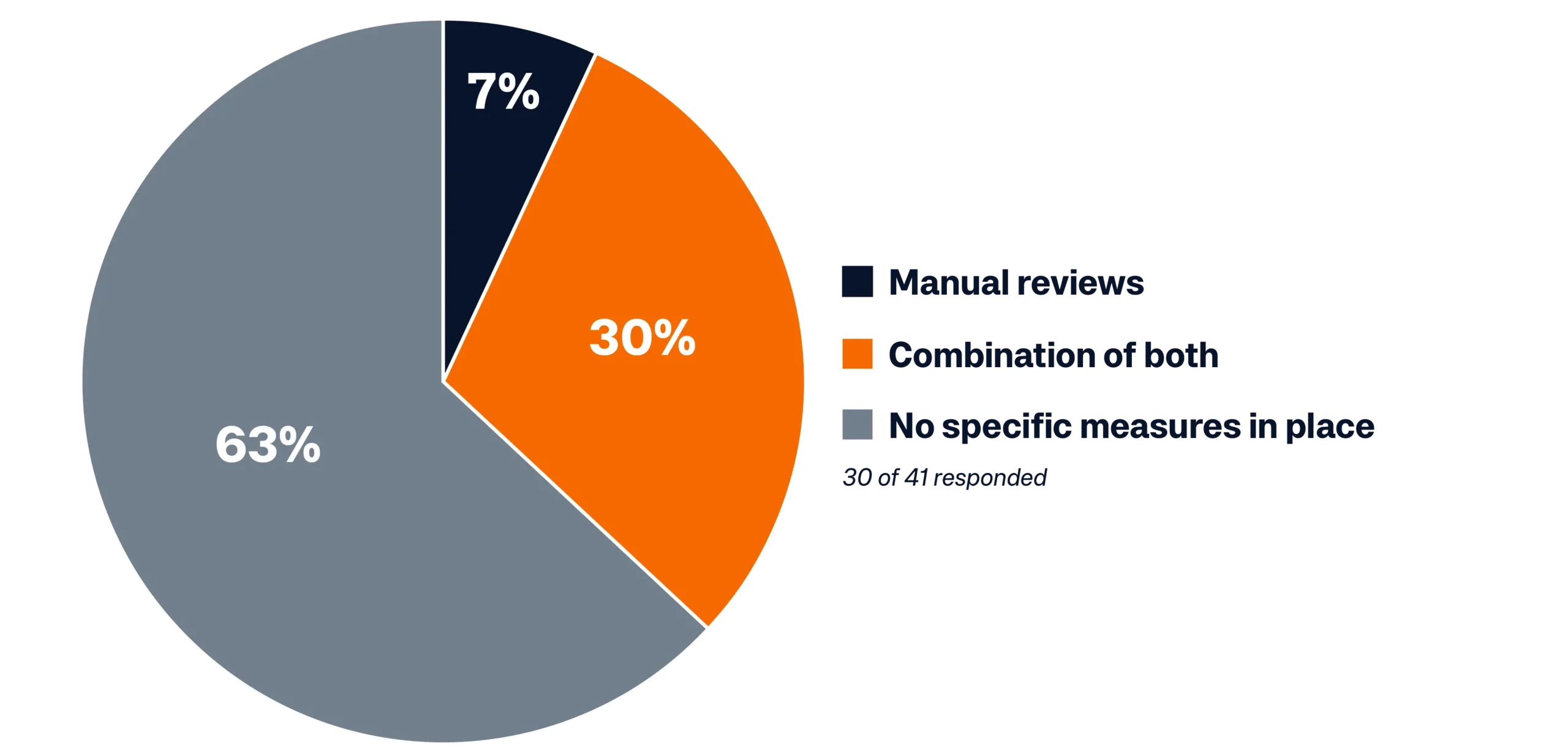

To kick it off, we polled the audience to get a sense of their readiness for AI. Here’s what they said:

Methodology: Consider these survey results a pulse check on how CISOs are using AI in business today. The results are based on an average of 34 responses per question, gathered by polling an audience of CISOs and people in similar roles during a presentation.

- The majority of respondents (82%) said they are already using or planning on using AI as part of their business.

- Of the respondents implementing AI in their businesses, the majority (82%) also reported they are training the AI model on their own data. Only 15% said they were not using their own data to train their AI model.

- When it comes to respondents’ understanding of the origins and integrity of that training data, 47% said they were somewhat aware of the origins but that their understanding needed improvement, and 44% said they were not very confident in their data sources. That’s a total of 91% who were unsure about where their data comes from; only 9% felt confident about the data they’re training their AI models on.

- Lastly, when asked about measures to ensure the quality and consistency of the data used to train AI models, the majority of respondents (63%) said they have no specific measures in place.

So what does this all mean?

Most organizations are trying to use LLMs to enable their businesses to operate at a much faster pace. The key to achieving this is allowing LLMs and generative AI models to learn from their data and leverage it effectively. Clearly, we were all on the same journey here.

As the results show, many organizations struggle with the quality and sources of their data. This is a common challenge because data volumes keep growing. Data classification, and email classification in particular, is both a problem and a nuisance, and without a systematic approach it isn’t very effective.

The quality and consistency of the data used to train models is another area of concern. While you might currently know the source and quality of your data, maintaining that knowledge over the long term without systematic controls is challenging. Some organizations rely on manual reviews, but these are not continuous, so data can drift between reviews depending on how often they happen. My hope is that people feel a little less stressed because we can all see we’re up against the same challenges.
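As a minimal sketch of what an automated, continuous control might look like, the check below assumes training records arrive in batches as Python dicts with hypothetical `text` and `source` fields, plus a hypothetical allowlist of approved sources. It flags records from unapproved sources, empty records, and a shift in the mix of sources compared with a baseline batch, measured with total variation distance. The field names, allowlist, and 0.2 threshold are illustrative assumptions, and drift in the source mix is only one narrow signal, not a full data-governance pipeline.

```python
from collections import Counter

# Hypothetical allowlist of approved data sources (assumption for illustration).
APPROVED_SOURCES = {"crm_export", "support_tickets", "product_docs"}


def source_mix(batch):
    """Return the fraction of records that come from each source."""
    counts = Counter(record.get("source") for record in batch)
    total = sum(counts.values())
    return {source: n / total for source, n in counts.items()} if total else {}


def total_variation(p, q):
    """Total variation distance between two discrete distributions (0 = identical, 1 = disjoint)."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def check_batch(batch, baseline, drift_threshold=0.2):
    """Run provenance, completeness, and source-drift checks; return a list of issues."""
    issues = []
    for i, record in enumerate(batch):
        if record.get("source") not in APPROVED_SOURCES:
            issues.append(f"record {i}: unapproved source {record.get('source')!r}")
        if not str(record.get("text", "")).strip():
            issues.append(f"record {i}: empty text")
    drift = total_variation(source_mix(baseline), source_mix(batch))
    if drift > drift_threshold:
        issues.append(f"source mix drifted by {drift:.2f} (threshold {drift_threshold})")
    return issues


if __name__ == "__main__":
    baseline = [{"source": "crm_export", "text": "a"}, {"source": "product_docs", "text": "b"}]
    new_batch = [{"source": "scraped_forum", "text": "c"}, {"source": "crm_export", "text": ""}]
    for issue in check_batch(new_batch, baseline):
        print(issue)
```

Run on every incoming batch (for example, in the ingestion job), a check like this turns a periodic manual review into a continuous one; a non-empty result can block the batch or route it to a human.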

Poll questions:

- Are you already using (or planning on using) AI as part of your business?

- Are you training (or planning on training) the AI model using your own data?

- How well do you understand the origins and integrity of the data you plan to use for AI training?

- How do you ensure the quality and consistency of the data used for training your AI models?

Security Testing AI and LLMs

When NetSPI conducts AI/ML penetration testing, we divide our testing capabilities into three categories:

1. Machine learning security assessment

Organizations are building models and seeking help with testing them. In this area, we offer a comprehensive assessment designed to evaluate ML models, including Large Language Models (LLMs), against adversarial attacks, identify vulnerabilities, and provide actionable recommendations to ensure the overall safety of the model, its components, and their interactions with the surrounding environment.

2. AI/ML web application penetration testing

This is where most of our AI/ML security testing requests come from. Organizations are paying service providers for access to models, which they then deploy on their networks or integrate into applications through APIs or other means. We receive many requests to help organizations understand the security implications of the AI models they choose, whether they are purchasing those models or integrating them into their solutions.

3. Infrastructure security assessment

This category of AI/ML security testing centers on the infrastructure surrounding your model. Our infrastructure security assessment covers network security, cloud security, API security, and more, ensuring that your company’s deployment adheres to defense-in-depth security policies and mitigates potential risks.

Testing Methodology in AI Security

At NetSPI, we take a collaborative approach to testing AI models by partnering closely with organizations to understand their models and objective functions. Our goal is to develop testing techniques that challenge these models and ensure robustness beyond standard benchmarks such as the OWASP Top 10 for Large Language Model Applications. Here’s how we achieve this:

5 Ways Malicious Actors Attack AI Models

Evasion

One of the most prevalent techniques targets models that detect or decide based on their input, such as classifiers. By crafting inputs that appear benign to humans but mislead the model, we demonstrate how models can misinterpret data.
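To illustrate evasion, here is a minimal sketch of a fast gradient sign method (FGSM) style perturbation against a toy linear classifier. The weights, input, and epsilon are made-up assumptions; real evasion testing targets the actual model and typically needs gradients or many queries. For a linear score w·x + b, the gradient with respect to the input is simply w, so moving each feature a small, bounded step against the sign of its weight lowers the score as fast as possible for that per-feature budget.

```python
def score(weights, bias, x):
    """Linear decision score; a positive value means the input is flagged (e.g. 'malicious')."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_evade(weights, x, epsilon):
    """Shift every feature by exactly epsilon in the direction that lowers the score.

    For a linear model the input gradient of the score is the weight vector,
    so stepping against sign(w) is the fast gradient sign method for this case.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Toy detector and a sample it correctly flags (values are illustrative only).
weights = [0.8, -0.5, 0.3, 0.6]
bias = -0.35
sample = [0.5, 0.2, 0.4, 0.3]

adversarial = fgsm_evade(weights, sample, epsilon=0.15)
print(score(weights, bias, sample) > 0)       # True: original input is flagged
print(score(weights, bias, adversarial) > 0)  # False: perturbed input slips past
```

No feature moves by more than 0.15, yet the decision flips, which is the core of an evasion attack: small, targeted changes that a human would likely overlook.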

Data Poisoning

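In a data poisoning attack, an adversary injects or alters training data so the model learns behavior the attacker wants, such as misclassifying certain inputs. To test for this, we scrutinize the training data itself, examining biases and input sources. Understanding how data influences model behavior allows us to detect and mitigate potential biases that could affect model performance.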
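The sketch below shows the mechanism with a label-flipping attack on a toy nearest-centroid classifier: a handful of mislabeled records injected into the training set drag the "benign" centroid far enough that a clearly malicious-looking input changes class. The data points, labels, and choice of classifier are illustrative assumptions picked to make the effect easy to see.

```python
def centroid(points):
    """Mean of a list of equal-length feature vectors."""
    n = len(points)
    return [sum(coords) / n for coords in zip(*points)]


def train(dataset):
    """Fit a nearest-centroid classifier: one mean vector per label."""
    by_label = {}
    for features, label in dataset:
        by_label.setdefault(label, []).append(features)
    return {label: centroid(points) for label, points in by_label.items()}


def predict(model, x):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    def dist(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(model, key=lambda label: dist(model[label]))


clean = [
    ([0.0, 0.0], "benign"), ([0.2, 0.1], "benign"), ([0.1, 0.3], "benign"),
    ([1.0, 1.0], "malicious"), ([0.9, 1.1], "malicious"), ([1.1, 0.8], "malicious"),
]
# Attacker-controlled records: malicious-looking points mislabeled as benign.
poison = [([1.2, 1.2], "benign")] * 4

probe = [0.8, 0.8]
print(predict(train(clean), probe))           # malicious
print(predict(train(clean + poison), probe))  # benign
```

Because the poisoned records look like ordinary training data apart from their labels, checks on data provenance and label consistency are what catch this kind of tampering.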

Data Extraction

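Attackers can use carefully chosen queries to extract information a model is meant to keep hidden, such as memorized training data or the model’s own parameters. Attempting these extractions ourselves provides insights that may not be readily accessible, which helps uncover vulnerabilities and improve model transparency.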
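One concrete form of extraction is model extraction, where an attacker reconstructs a model’s hidden parameters purely from its outputs. As a minimal sketch, assuming a hypothetical black-box API that returns the raw score of a linear model, d + 1 queries are enough to recover all d weights and the bias: the all-zeros input reveals b, and each unit vector e_i reveals w_i + b. Real models need far more queries and usually yield only an approximation, but the principle of learning hidden values through the query interface is the same.

```python
def make_black_box(weights, bias):
    """Simulate a prediction API that hides its parameters but returns raw scores."""
    def query(x):
        return sum(w * xi for w, xi in zip(weights, x)) + bias
    return query


def extract_linear_model(query, n_features):
    """Recover (weights, bias) of a linear scorer with n_features + 1 queries."""
    bias = query([0.0] * n_features)  # f(0) = b
    weights = []
    for i in range(n_features):
        unit = [0.0] * n_features
        unit[i] = 1.0
        weights.append(query(unit) - bias)  # f(e_i) - b = w_i
    return weights, bias


# The "secret" parameters below are illustrative; the attacker only calls secret_api.
secret_api = make_black_box([0.7, -1.3, 2.5], bias=0.4)
stolen_weights, stolen_bias = extract_linear_model(secret_api, n_features=3)
print(stolen_weights, stolen_bias)  # matches the secret values up to float rounding
```

Returning only a class label instead of a raw score, and rate-limiting queries, raises the cost of this kind of attack, although it does not eliminate it.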

Inference and Inversion

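We explore whether an attacker can use a model’s outputs to infer sensitive information, such as whether a particular record was part of the training set (membership inference) or what the training inputs looked like (model inversion). This uncovers scenarios where trained models inadvertently leak confidential data.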
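Membership inference is the easiest of these to show in a few lines: the attacker decides whether a record was in the training set by watching how confident the model is about it. The sketch below assumes a hypothetical, heavily overfit model over two illustrative features (age and salary) whose confidence peaks on records it memorized, and an attacker who simply thresholds that confidence. The model, the records, and the 0.9 threshold are all assumptions for illustration.

```python
import math

# Records the hypothetical model was trained on; the attacker never sees this list.
TRAINING_RECORDS = [(34.0, 72000.0), (51.0, 58000.0), (29.0, 91000.0)]


def model_confidence(record):
    """Toy overfit model: confidence decays with distance to the nearest memorized record."""
    nearest = min(math.dist(record, seen) for seen in TRAINING_RECORDS)
    return 1.0 / (1.0 + nearest)


def infer_membership(record, threshold=0.9):
    """Attacker's guess: very high confidence suggests the record was in the training set."""
    return model_confidence(record) >= threshold


print(infer_membership((51.0, 58000.0)))  # True: an exact training record
print(infer_membership((40.0, 65000.0)))  # False: a record the model never saw
```

If the training set is, say, a list of patients or customers, merely confirming that someone is in it can be a leak, which is why overfitting and overly precise confidence scores are treated as privacy risks.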

Availability Attacks

Similar to denial-of-service attacks on traditional systems, threat actors can target AI models to disrupt their availability, for example by flooding an endpoint with requests or by submitting inputs that are unusually expensive to process. Understanding the underlying mathematical principles helps us find where model resilience breaks down.
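On the defensive side, a common first layer against availability attacks on a model endpoint is to cap how much work a single request can demand and how often a client can call. For transformer-based LLMs, standard self-attention cost grows roughly quadratically with input length, which is one reason oversized inputs matter. The sketch below assumes a hypothetical inference gateway with a per-client token bucket and a maximum input length; the limits are placeholder values, and in practice these controls often live in an API gateway rather than in application code.

```python
from dataclasses import dataclass, field

MAX_INPUT_CHARS = 4000      # assumed cap on prompt size per request
BUCKET_CAPACITY = 5         # assumed burst allowance per client
REFILL_PER_SECOND = 1.0     # assumed sustained request rate per client


@dataclass
class TokenBucket:
    tokens: float = BUCKET_CAPACITY
    last_refill: float = 0.0


@dataclass
class ModelGateway:
    buckets: dict = field(default_factory=dict)

    def admit(self, client_id: str, prompt: str, now: float) -> bool:
        """Return True if the request may reach the model, False if it is rejected."""
        if len(prompt) > MAX_INPUT_CHARS:
            return False  # oversized inputs inflate compute cost per request
        bucket = self.buckets.setdefault(client_id, TokenBucket(last_refill=now))
        # Refill tokens for the time elapsed since this client's last request.
        elapsed = now - bucket.last_refill
        bucket.tokens = min(BUCKET_CAPACITY, bucket.tokens + elapsed * REFILL_PER_SECOND)
        bucket.last_refill = now
        if bucket.tokens < 1:
            return False  # client has exhausted its request budget
        bucket.tokens -= 1
        return True


if __name__ == "__main__":
    gateway = ModelGateway()
    print([gateway.admit("client-a", "hello", now=0.0) for _ in range(6)])
    print(gateway.admit("client-a", "hello", now=2.0))
    print(gateway.admit("client-b", "x" * 5000, now=0.0))
```

The caller passes the current time explicitly (for example, `time.monotonic()`), which keeps the limiter deterministic and easy to test; the burst of six requests at time 0 admits five and rejects the sixth, and tokens refill as time passes.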

It’s crucial to recognize that AI at its core operates on mathematical principles. Everything discussed here – whether attacks or defenses – is grounded in mathematics and programming. Just as models are built using math, they can also be strategically challenged and compromised using math.

The limitless potential of AI for business applications is matched only by its potential for adversarial attacks. As your team explores developing or incorporating ML models, ensuring robust security from ideation to implementation is crucial. NetSPI is here to guide you through this process.

Access our whitepaper, The CISO’s Guide to Securing AI/ML Models, to learn more and join us in enhancing the collective security of AI.

